Image: Ulrich Buckenlei | XR Stager Newsroom

𝗧𝗛𝗜𝗦 𝗪𝗘𝗘𝗞 𝗜𝗡 𝗧𝗘𝗖𝗛 – Welcome to the Technovation Highlights for the week of June 9–15, 2025, presented by the XR Stager Online Magazine

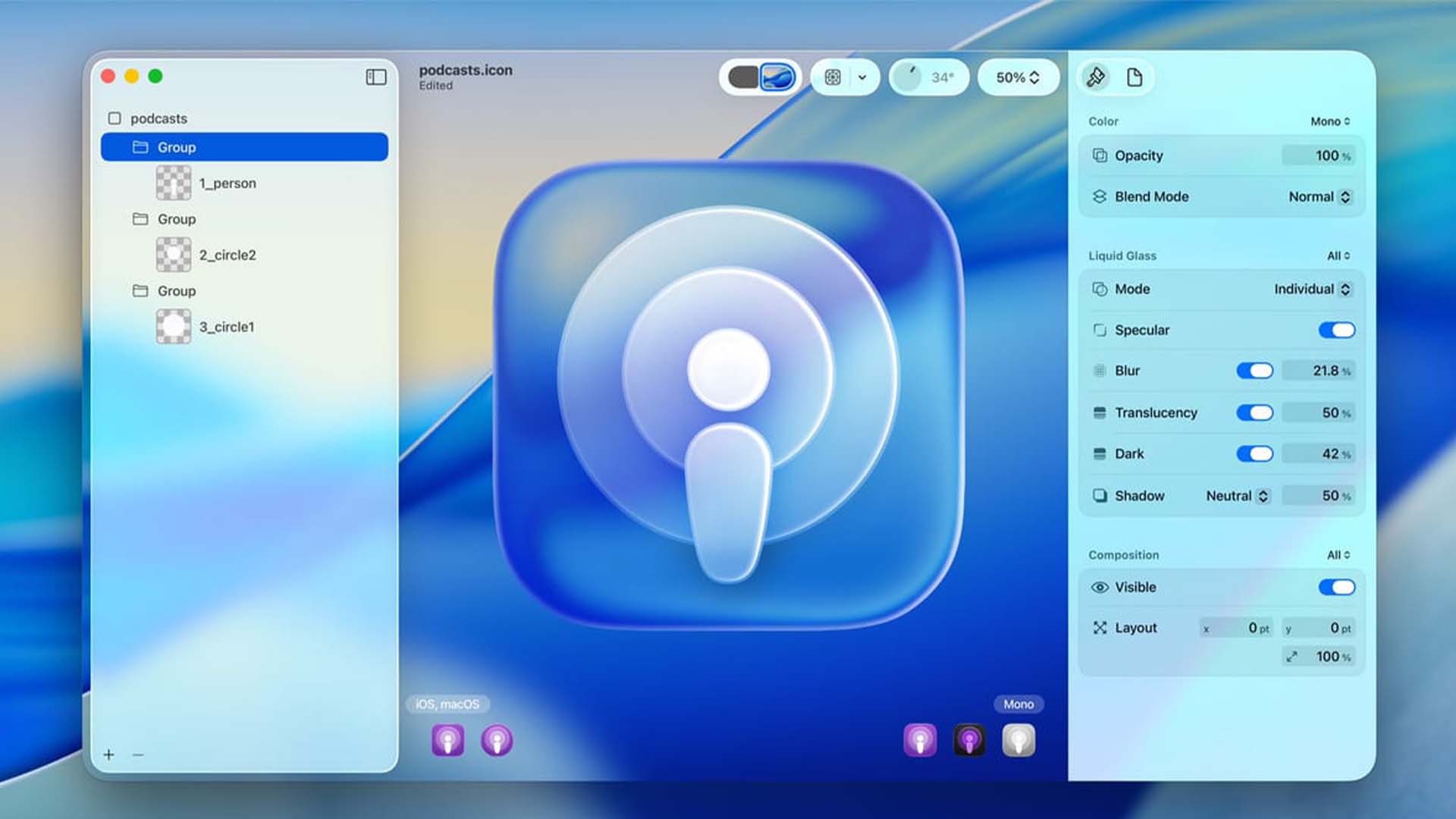

Apple WWDC 2025 – visionOS 26 introduces AI widgets and Liquid Glass UI

With WWDC 2025, held in calendar week 24, Apple opened a new chapter in the convergence of artificial intelligence and spatial computing. The highlight was the expansion of "Apple Intelligence", the company's AI system, which brings personalized AI functions to iPhone, iPad, and Mac – including contextual text generation, semantic search, and voice-controlled image editing. What sets it apart: Apple pairs on-device processing with strong security and a clear focus on privacy. Especially notable was the integration of these functions into existing apps such as Mail, Notes, and Pages – all within Apple's characteristically consistent UI design.

On the hardware side, Apple further emphasized the role of the Vision Pro headset. Although no new model was announced, iPad apps now receive native support on visionOS, and new APIs enable richer interactions with spatial elements. Apple also introduced developer tools that integrate AI models directly into Xcode, alongside native Vision Pro interfaces – a clear signal toward immersive, intelligent app development. This opens strategic opportunities, especially for XR software developers.

Apple is also continuing its collaboration with OpenAI, which embeds selected ChatGPT features into Siri and other system services. Apple remains consistent in its approach: data processing stays under user control, and the AI functions are optional and can be disabled on the device. This positions Apple not only as a hardware provider but also as a platform for responsible, user-centric AI in spatial contexts – with a focus on privacy, UX, and system integration.

- Liquid Glass UI: Transparent, reactive interfaces

- AI-powered system widgets

- Focus on spatial interactions

SOURCE: Ulrich Buckenlei (Digital Analyst)

WWDC 2025: visionOS 26 transforms XR interaction with AI widgets and new interfaces.

Image source: Apple

NVIDIA JUPITER – Europe’s fastest AI supercomputer launches

In week 24, the official launch of JUPITER – one of Europe’s most ambitious AI supercomputing projects – marked a major milestone in global tech development. Developed by Forschungszentrum Jülich in cooperation with NVIDIA, ParTec, and Eviden, the system combines cutting-edge NVIDIA Grace Hopper chips with a dedicated infrastructure for large language models (LLMs), simulation research, and industrial AI applications.

JUPITER is designed for more than one ExaFLOP of computing power and to process massive datasets in real time. This makes it suitable not only for scientific climate models, medical protein analyses, or traffic optimization, but especially for the development of European foundation models – in areas like medicine, energy transition, or AI safety. Startups and SMEs are also expected to benefit through dedicated access channels.

What makes JUPITER stand out is not just its scalability, but its "AI at Scale" strategy focusing on sustainability: a sophisticated cooling system and renewable energy usage enable an energy-efficient supercomputing infrastructure that could set new benchmarks. For Europe, this project is a strong signal in the global race for technological sovereignty in the AI era – and for NVIDIA, it underscores its pivotal role in the future AI economy.

- AI supercomputing with over 90 ExaFLOPS of AI performance

- Research in medicine, climate, and robotics

- European flagship project for AI

SOURCE: NVIDIA NEWSROOM

JUPITER marks Europe’s entry into the ExaFLOP era of artificial intelligence.

Image source: NVIDIA

Snapdragon AR1+ – AI glasses without a smartphone

In calendar week 24, Qualcomm made headlines with the official launch of its new Snapdragon AR1+ Gen 1 chip, developed specifically for compact, standalone AI glasses. Unlike previous AR platforms, this solution requires no connected smartphone – all computing, sensor fusion, and AI models run directly on the chip within the wearable.

The AR1+ is designed for ultra-light glasses that are always ready: whether for navigation, AI-powered assistance, visual search, or contextual translation – the glasses understand their surroundings, analyze content in real time, and only display information when it’s relevant. The strong on-device AI not only ensures lower latency but also improves privacy since data is processed locally.

Partners such as Ray-Ban Meta, OPPO, and Xiaomi are reportedly already working on products using the AR1+. What’s especially exciting: the platform is open to third-party developers, potentially fostering a new ecosystem around AI-driven augmented reality experiences. With the AR1+, Qualcomm marks a shift – away from smartphones as AR hosts and toward true spatial intelligence worn on the head.

- Autonomous AR experience with AI integration

- Full spatial computing features

- Optimized for everyday use and interaction

SOURCE: Qualcomm News

The new AR1+ brings AI directly to your eyes – no smartphone or tethering needed.

Image source: Qualcomm

CGT Europe Summit – The Fourth Industrial Revolution kicks off in Paris

In the heart of Paris, the next phase of industrial transformation was launched: the CGT Europe Summit gathered key figures from industry, research, AI, and automation in week 24 to shape a new era – defined by cognitive factories, digital twins, edge AI, and immersive human-machine interaction.

At the core of the conference were real-world projects already deploying AI, XR, and robotics in industrial environments – at companies like Renault, Schneider Electric, and Bosch Rexroth. The combination of data intelligence, 3D simulation, and autonomous control systems shows that Europe’s industry is pushing transformation and beginning to establish its own platforms and standards.

One widely discussed concept was the “Cognitive Green Twin” – a new approach centered on energy efficiency, sustainability, and real-time optimization in smart production systems. The summit made it clear: the Fourth Industrial Revolution is underway – not in the distant future, but now – with Europe as a driving force.

- Global commitment to industrial transformation

- Focus on AI, robotics, and XR

- Paris as Europe’s tech future hub

SOURCE: Ulrich Buckenlei (Digital Analyst)

From Paris to the world: The Fourth Industrial Revolution is gaining momentum.

Image source: CGT Europe

Niantic Spatial @ AWE USA

At AWE USA, Niantic unveiled a visionary project that redefines the intersection of geodata, AR, and social networks: Niantic Spatial links real-world locations with augmented information – enriched by Instagram data, visual markers, and AI-generated interactions. The result: a playful, deeper perception of the physical world – driven by context, creativity, and community.

The system uses geo-AI to generate content based on location, time of day, or social context. In the demo, visitors navigated through real cities, collected clues, unlocked content, and engaged with friends or collective challenges. The boundaries between game, social app, and navigation completely dissolve.

What’s emerging here is more than just “Pokémon Go 2.0”: it’s the blueprint of a new reality layer where public spaces become personalized, interactive experiences. Niantic is giving us a glimpse into the future role of AR in urban life – local, social, and dynamic.

- Geo-AI meets social networks

- Augmentation of real places via Instagram data

- Gamified navigation through real cities

SOURCE: awexr.com

Niantic presents new AR demo powered by Instagram and Geo-AI.

Image source: Niantic Labs

ElevenLabs Eleven V3 Alpha

In calendar week 24, ElevenLabs made waves with the release of Eleven V3 – its most powerful multilingual voice model to date. The new version not only delivers much more realistic voices in over 30 languages, but also supports expressive dynamics like emotion, emphasis, and pauses – a significant leap from previous models. The update has been especially well received in storytelling, film production, learning platforms, and games, where it also enables personalized voice design.

Two features stand at the core of this update: first, the ability to convincingly convey complex emotions like irony, nervousness, or excitement – and second, a new API that allows developers to fine-tune voices via code. Feedback from the creator community shows: many see this as more than a content creation tool – it’s a new level of human-machine interaction, especially for accessible applications and AI avatars.

Compared to competitors like Play.ht or Microsoft Azure Voice, Eleven V3 stands out for its naturalness, speed, and adaptability. Especially noteworthy: voices now sound less generic and can exhibit distinct personality traits – whether formal, creative, or theatrical. This makes ElevenLabs one of the key players in the rapidly growing field of generative voice AI.

- Emotion-responsive Text-to-Speech (TTS)

- Multilingual & realistic

- For games, education, AI agents

SOURCE: ElevenLabs Blog

New TTS voices respond to emotion in real time.

Image source: ElevenLabs

Google Veo 3 – Speed Boost Update

Google has unveiled an impressive upgrade of its AI-powered video generator with Veo 3. The new model not only delivers better visual quality, but especially more speed: the generation of video sequences is significantly faster, smoother, and more consistent. This becomes particularly evident with longer clips or more complex scenes – prompt-to-video at a speed that boosts creative output like never before.

In addition to improved speed, Veo 3 introduces dynamic scene direction, where camera movements, perspective shifts, and image depth are realistically simulated. The integration of motion logic, environmental details, and narrative tension marks a quantum leap over earlier versions.

Google plans to make Veo available not only for research but also for YouTube Shorts and creator platforms. What started as a prototype is becoming a mass tool for creative AI production – with great potential for ads, content creation, educational films, and storytelling in tomorrow’s media landscape.

- Faster text-to-video rendering

- New control features like perspective switching

- Stronger AI for smooth motion

SOURCE: Google Blog

Google Veo 3 boosts speed and control in AI video.

Image source: Google

EU XM-Master – XR robotics for Industry 5.0

With the EU XM-Master project, Europe is placing the spotlight on the connection between Extended Reality (XR) and robotics training as part of its industrial initiative. The goal is to rethink qualification for highly complex production systems – particularly where conventional training reaches its limits: real-time data, spatial cognition, and physical interaction.

Training takes place in immersive simulation environments that precisely replicate real industrial facilities. Learners operate robotic arms, machines, and maintenance systems with natural hand movements, analyze AI-supported sensor data in mixed reality overlays, and learn through trial and error – without risk, without material waste, and with maximum learning effect.

The project brings together renowned partners such as Fraunhofer, CEA, and TNO and is supported by the European Commission. Its focus is on sustainable transformation, inclusive training, and application-oriented research. XM-Master thus becomes a flagship for Industry 5.0 – where human-centered AI, automation, and XR training converge.

- Training platform with XR & real robotic systems

- Early prototypes for Industry 5.0

- Supports AI co-agents & digital twins

SOURCE: CORDIS.europa.eu

EU supports XR-based robotics training for industrial environments.

Image source: EU Project Platform

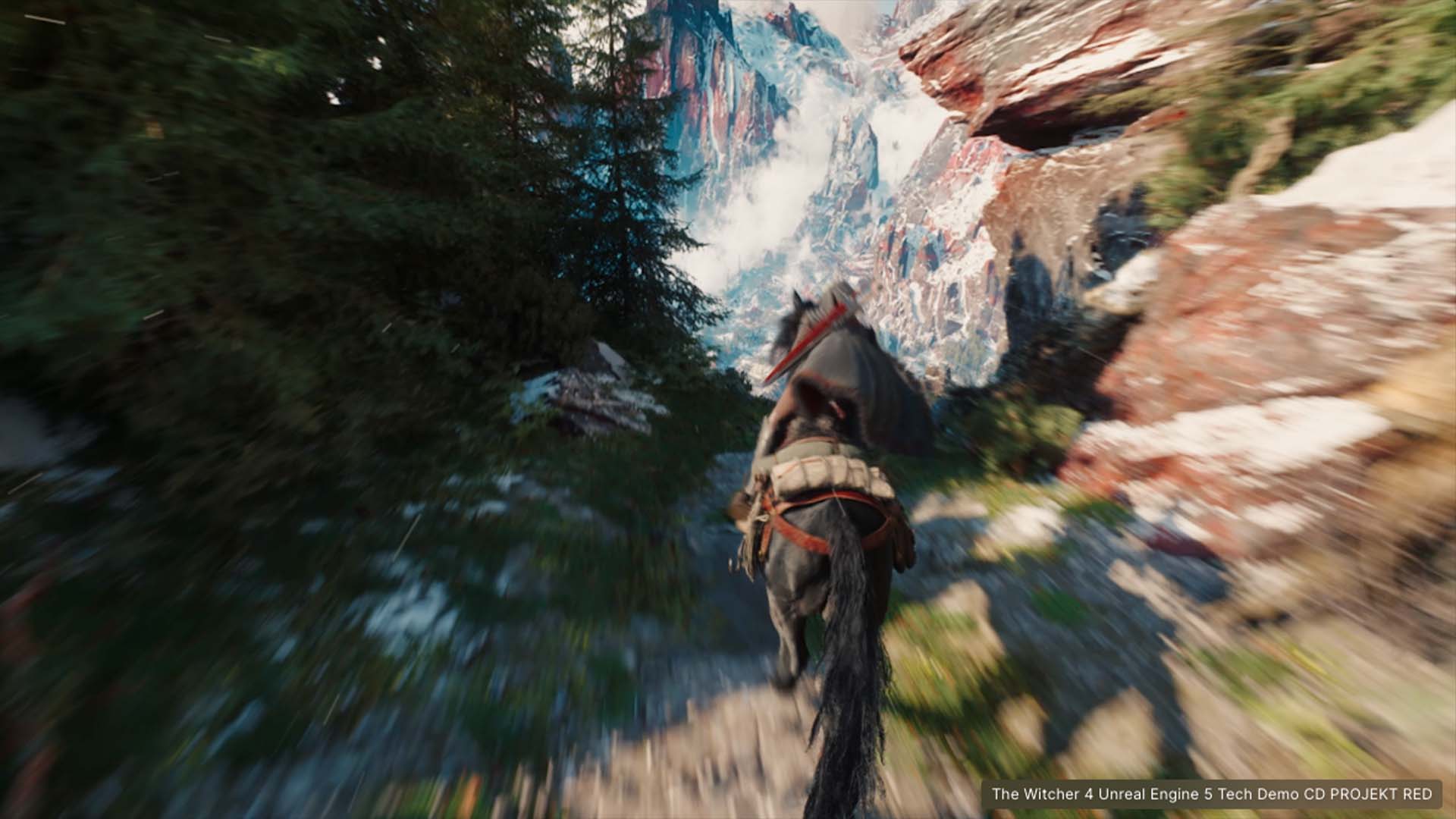

Unreal Engine 5.6 – Bigger Worlds, Faster Renders

With the release of Unreal Engine 5.6, Epic Games takes its real-time engine to the next level – bringing film, gaming, and industrial production even closer together. The new version stands out with two major upgrades: bigger worlds and significantly faster render times.

Key technologies include World Partition Streaming, which loads massive 3D environments in seamless sections, and the revamped Nanite Geometry System, rendering billions of polygons in real time – now also supporting dynamic geometry and material changes. Combined with Lumen Global Illumination, UE 5.6 achieves new levels of lighting, depth, and physical realism.

For developers, architects, and filmmakers, this means more realistic scenes, shorter production cycles, and, for the first time, real simulated physics for vegetation and crowd behavior. Unreal 5.6 becomes the go-to platform for immersive content creation – from smart factories to virtual sets.

- MetaHuman update & realistic facial rigging

- Level streaming for massive game worlds

- New tools for real-time animation & design

SOURCE: Unreal Engine Blog

Unreal Engine 5.6 with a MetaHuman boost in The Witcher 4 tech demo.

Image source: Epic Games

BONUS – Youth Study: XR becomes a key technology

A new youth study from Germany reveals: for Gen Z, XR is far from a gimmick – it is increasingly seen as a core future technology. Over 70% of respondents aged 14 to 24 said they could imagine using XR applications regularly in everyday life, education, or work.

Notably: while classic social media platforms like Instagram and TikTok remain in use, interest in immersive environments – where avatars, AI, and interaction merge – is growing. In the study, XR is explicitly described as “more relevant than classic computer interfaces,” with potential for communication, shopping, creativity, and learning.

The study, conducted by an interdisciplinary team of education and media researchers, recommends promoting XR skills in schools. This makes XR not only the flagship technology of a new tech generation but also an educational mandate for society as a whole.

- 63% of teens rely on immersive technologies

- XR & AI rank ahead of gaming and social media

- Tech visions influence career & study choices

SOURCE: XRA.ORG

63% of young people show strong interest in immersive technology.

Image source: EDU Insights

Seize the opportunity now!

The Visoric team supports companies in implementing innovative technologies – from immersive real-time applications to AI-powered assistance systems.

Our services include:

- Strategy consulting and technology transfer → Realizing the potential of XR, AI, and digital twins.

- Custom solution development → From prototypes to production-ready systems.

- Training and enablement → We prepare your teams for the next technology generation.

Let's get started together – contact the Visoric team today and actively shape the transformation!

Contact Us:

Email: info@xrstager.com

Phone: +49 89 21552678

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@xrstager.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@xrstager.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich