The User Interface in Marey (Visualization)

Visualization: Ulrich Buckenlei

Marey AI Film Generation – A New Era of Automated 3D Production

Artificial intelligence is transforming 3D film production. With Marey, developed by the California-based AI studio Moonvalley, cinematic sequences are generated automatically – based on text or image input. The software combines advanced language processing, computer-generated animation, and multimodal scene synthesis into a radically new production approach.

Moonvalley was founded in 2021 by an interdisciplinary team of AI researchers, game developers, and film technologists. Since then, the company has focused on generative media solutions for professional creative industries. Marey was first introduced in spring 2023 and has been available in a beta version for selected studios, agencies, and research partners since mid-2024.

Marey is considered one of the first fully integrated AI platforms for automated film scenes. Instead of relying on a traditional storyboard or animation workflow, it creates directing, cinematography, movement, lighting, and sound design directly from structured prompts, and does so in high visual quality.

Access is currently highly limited: interested organizations must register via the Moonvalley website and are invited after a project review. The target group includes professional creative teams, media-focused research institutions, and studios with an emphasis on AI-generated storytelling. A public version for end users is not currently planned.

In the Visoric Newsroom, we analyze this development and highlight specific opportunities and challenges for media companies, agencies, and 3D production studios.

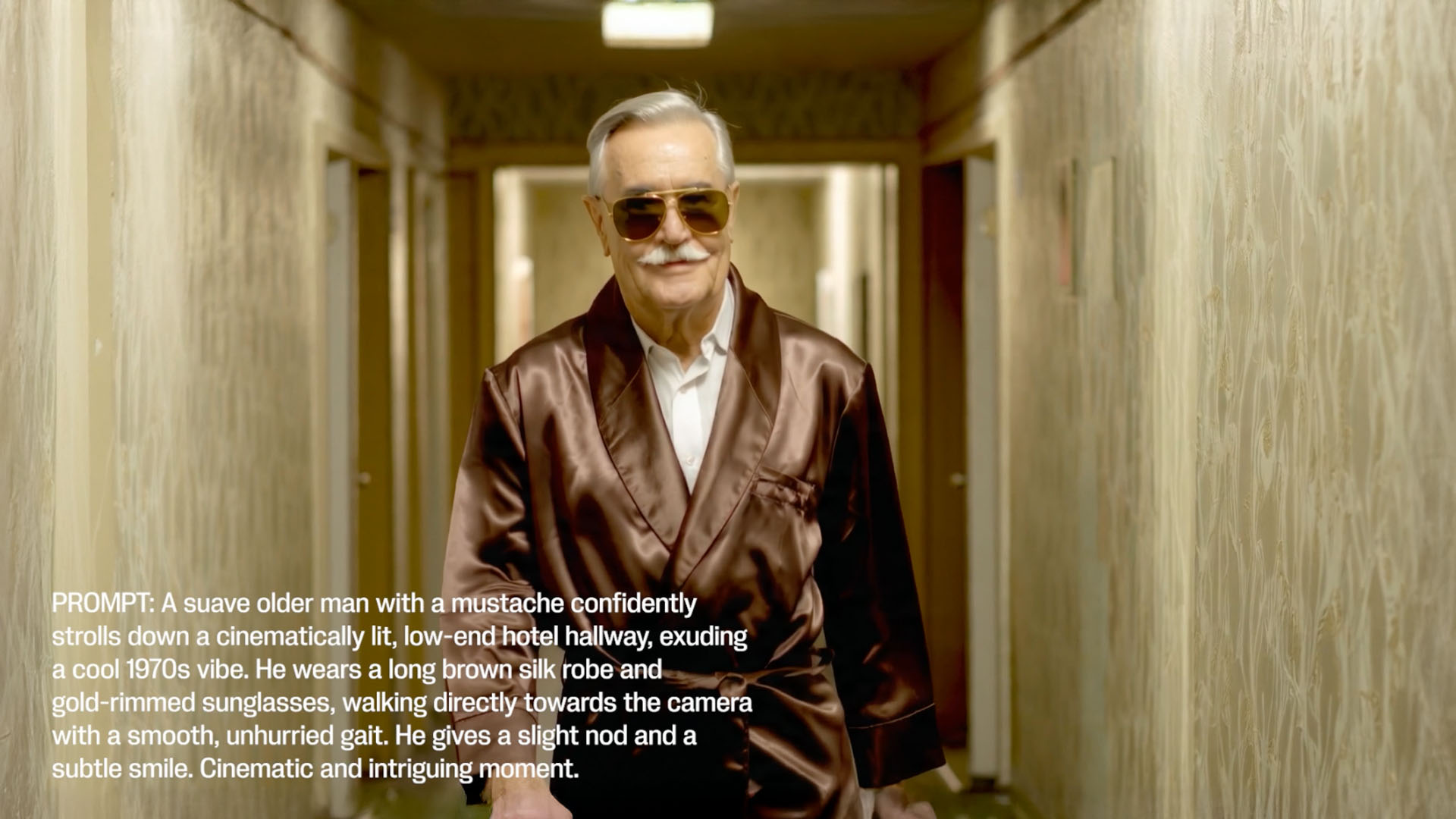

Sample film scene created with the Marey AI software by US developer Moonvalley

[Image: Moonvalley]

Concept: Text and Image Prompts as Directing Instructions

Marey is based on the idea of no longer staging cinematic scenes manually but generating them entirely from textual or visual input. The software interprets prompts as directing instructions and creates dynamic sequences including editing, camera movement, motion design, lighting, and sound – all in a consistent, cinematic style.

The key lies in semantic translation: Marey not only analyzes what should be shown but also how it should be cinematically staged. Emotional transitions, camera axes, and motion logic are automatically recognized and dramaturgically linked.

- Prompt-to-Sequence – Complete film scenes are created from a few text elements

- Semantic Staging – Content and cinematic implementation are logically intertwined

- Multimodal Output – Visual, acoustic, and dynamic levels are integrated

Complete film scenes emerge from just a few text elements

[Image: Moonvalley]

With Marey, the way stories are cinematically implemented changes: instead of painstakingly animating or filming scenes, creatives simply input text prompts – the software takes over direction, camera, movement, and sound design. Imagination replaces traditional production tools. Marey interprets these prompts with cinematic logic and generates coherent, dynamic sequences. The creative focus thus shifts from manual execution to conceptual direction: it’s not about creating individual images manually, but about articulating ideas that the system visualizes autonomously. For studios and agencies, this means a radical rethink – but also enormous potential for speed, diversity, and artistic precision.

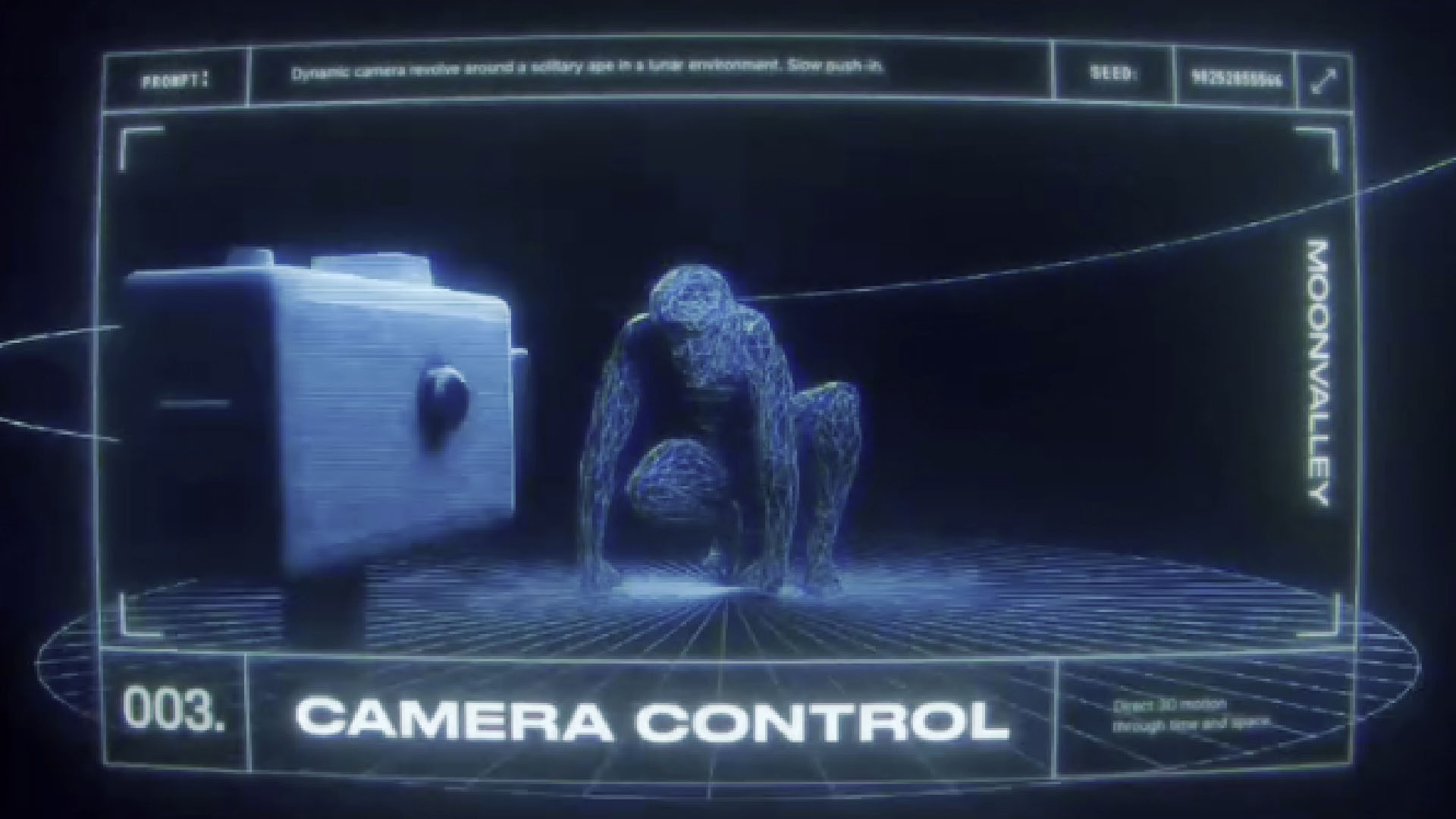

Technical Workflow and Architecture

Marey is based on a modular, AI-driven production system that transforms text- or image-based input into cinematic motion sequences. The technical architecture integrates different AI engines for story analysis, camera directing, lighting, sound design, and film editing into a consistent output system. Incoming prompts are translated into semantic film components, including shot sizes, camera movements, perspective changes, or spatial interactions.

A key feature is the ability to define object paths directly: this allows motion paths to be drawn that characters or vehicles follow precisely – including physical parameters such as acceleration, weight, and timing. These paths can be adjusted in real time, allowing creative changes to layout, choreography, or timing to be incorporated directly into the AI model. Layers like style, camera direction, or motion frequency can also be varied and combined independently.
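
The idea of a drawn path combined with physical timing can be illustrated with a short Python sketch. This is not Marey's implementation or API: the function names (`path_length`, `point_at_distance`, `sample_path`) and the simple constant-acceleration model are assumptions made purely for illustration, and weight is left out entirely. The sketch takes a path drawn as a list of 2D points and returns one position per frame for an object that starts at rest and accelerates along the path.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(points: List[Point]) -> float:
    """Total length of a polyline given as a list of 2D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def point_at_distance(points: List[Point], d: float) -> Point:
    """Position reached after travelling distance d along the polyline."""
    d = max(0.0, min(d, path_length(points)))
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        if seg > 0 and d <= seg:
            t = d / seg  # fraction of the current segment already covered
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        d -= seg
    return points[-1]


def sample_path(points: List[Point], acceleration: float, fps: int) -> List[Point]:
    """One position per frame for an object that starts at rest and moves
    with constant acceleration (s = 0.5 * a * t^2) until the path ends.
    Hypothetical model: real systems would use richer dynamics."""
    total = path_length(points)
    duration = math.sqrt(2.0 * total / acceleration)
    frames = math.ceil(duration * fps)
    samples = []
    for i in range(frames + 1):
        t = min(i / fps, duration)  # clamp the last frame to the end of the path
        samples.append(point_at_distance(points, 0.5 * acceleration * t * t))
    return samples


# Usage: an L-shaped path, sampled at 10 frames per second.
drawn_path = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0)]
positions = sample_path(drawn_path, acceleration=2.0, fps=10)
```

Changing the drawn points or the acceleration value and resampling is the toy equivalent of adjusting choreography or timing; the path geometry and the timing model stay independent of each other.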

Despite automation, the system allows a high degree of customization. Asset libraries, user-defined style guides, or look-up tables (LUTs) can reflect specific brand worlds. The result is not generic output but a curated cinematic experience aligned with the visual identity of each creative team.
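
For readers unfamiliar with look-up tables, the following Python sketch shows the general principle of a LUT: each input value is mapped to an output value through a table, with interpolation between entries. It says nothing about how Marey handles LUTs internally. The function names `apply_lut_1d` and `grade_pixels` and the three-entry example table are assumptions for illustration, and LUTs used in film grading are often three-dimensional rather than the simple one-dimensional form shown here.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, ...]


def apply_lut_1d(value: float, lut: Sequence[float]) -> float:
    """Map a normalized value in [0, 1] through a 1D LUT with linear interpolation."""
    value = max(0.0, min(1.0, value))
    pos = value * (len(lut) - 1)      # fractional index into the table
    lo = int(pos)
    hi = min(lo + 1, len(lut) - 1)
    frac = pos - lo
    return lut[lo] * (1.0 - frac) + lut[hi] * frac


def grade_pixels(pixels: List[Pixel], lut: Sequence[float]) -> List[Pixel]:
    """Apply the same 1D LUT to every channel of every pixel."""
    return [tuple(apply_lut_1d(c, lut) for c in px) for px in pixels]


# Hypothetical "brand look": keep black and white, darken the midtones.
brand_lut = [0.0, 0.2, 1.0]
graded = grade_pixels([(0.25, 0.5, 1.0)], brand_lut)
```

Because the look lives in the table rather than in the code, swapping in a different table changes the visual identity without touching the rest of the process.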

Movements follow defined paths with physical dynamics

[Image: Moonvalley]

This technical architecture enables intelligent control of cinematic expression without requiring users to have classical 3D or film editing expertise. Especially for agencies, prototyping teams, and AI creators, the system opens up a new production logic between automation and stylistic control.

User Experiences and Challenges

Initial feedback from professional users paints a clear picture: Marey significantly accelerates creative workflows and shifts the focus from technical implementation to conceptual design. The ability to generate full scene variants in a short time supports an agile storytelling approach, especially in early concept phases or when developing alternative visualizations.

At the same time, users report limitations typical of generative systems. Stylistic consistency can vary over longer sequences, especially with recurring characters or locations. Detailed control over facial expressions, timing, or specific camera positions is also limited, which makes well-structured, carefully considered prompts essential. Prompt literacy is becoming increasingly important: only those who formulate clearly and creatively can guide the system toward consistent results.

These experiences feed directly into the ongoing development of the platform. The Marey community is asking for more control over timing, transitions, and character coherence, as well as tools for personalized styles and modular scene development.

- Strengths – High production speed, creative freedom, visual diversity

- Limitations – Inconsistent character portrayal, repetitive patterns, lack of long-term coherence

- Requests – Finer control of style parameters, more stable scene logic, improved feedback system

High production speed, creative freedom, visual diversity

[Image: Moonvalley]

In practice, Marey is not a replacement for artistic vision but a powerful tool for turning ideas into high-quality results at high speed. The challenge lies in coordinating human and machine intelligently.

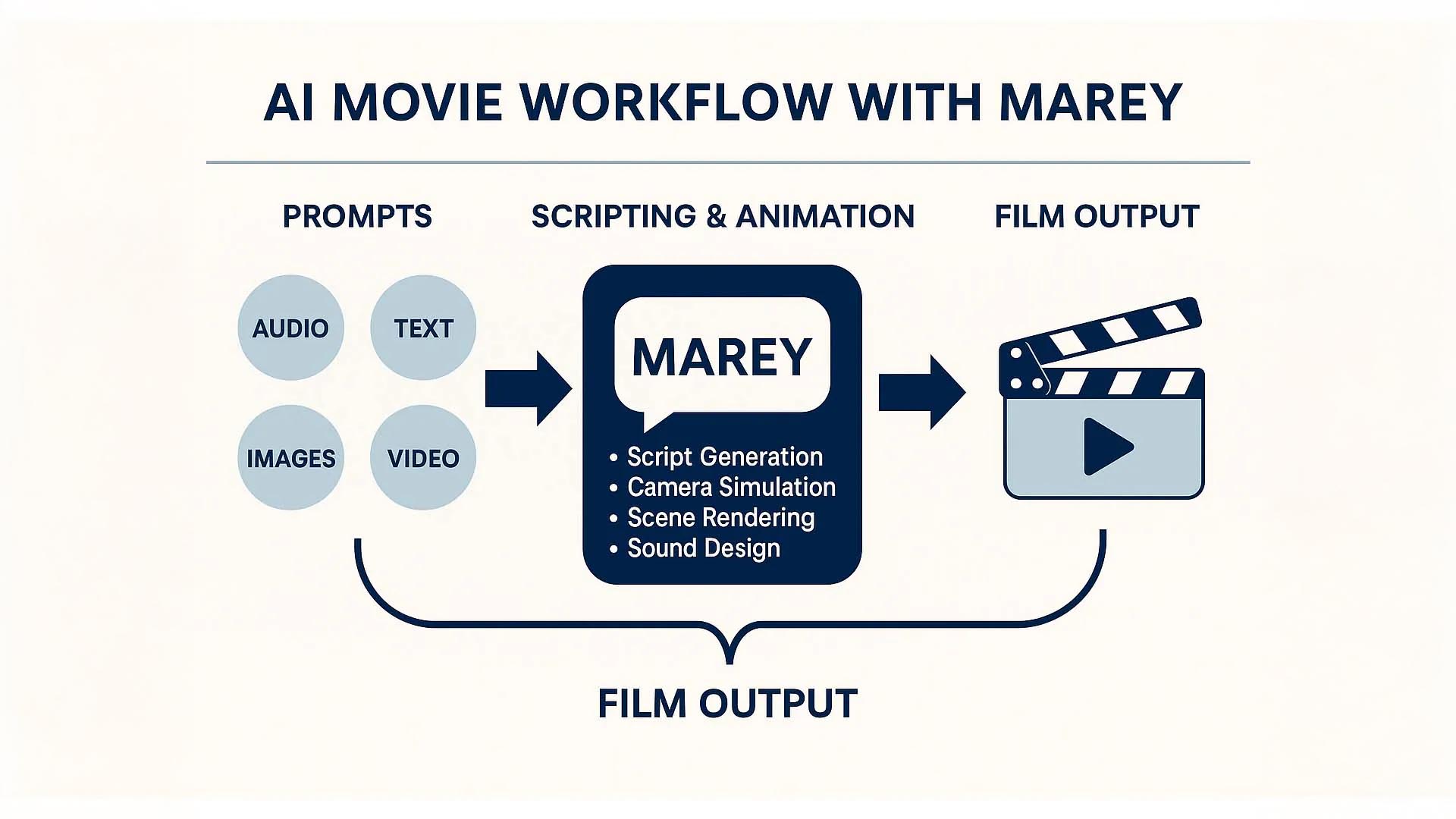

Infographic: Marey Workflow Overview

The visualization provides a structured overview of Marey’s modular software architecture and its end-to-end process – from initial input to finished film scene. At the center is the transformation of natural language input (text prompts) into visually and dramaturgically coherent scenes with camera, movement, lighting, and sound.

The workflow starts with the **semantic analysis of the prompt**: the AI breaks the input into action units, defines spatial references, recognizes actor logic, and translates this into cinematic structures like shot sizes, perspectives, and tension arcs.

Next is the **shot design module**, which prepares camera axes, image composition, and transitions. In parallel, **motion path systems** are activated – users can influence motion via textual means (“Character walks slowly toward the door”) or by visually defining paths. These serve as the basis for the motion engine, which produces realistic motion dynamics with physical plausibility.

Subsequent steps apply **visual layers such as lighting mood, environmental textures, and style parameters**, guided by pre-defined style guides or themes derived from the prompt. A **sound module** is also integrated: based on the scene and its dramatic progression, music, ambient sounds, and dialogue are generated or placed synthetically.

Infographic: Marey Workflow Overview

Visualization: Ulrich Buckenlei

The final step is the **composition and output** of the film scene: all components (visual frames, audio tracks, timing information) are merged into a unified, seamless result, provided as a preview or as a high-quality export.
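
The sequence of stages described in this section can be sketched as a simple chain of functions in Python. Everything in the sketch is hypothetical: the `Scene` class, the `stage` helper, the stage names, and the stub values are illustrative assumptions, not Marey's actual modules or API. The stages also run strictly one after another here, whereas the article describes shot design and motion paths as working in parallel.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Scene:
    """Accumulates the results of each stage (hypothetical structure)."""
    prompt: str
    data: Dict[str, object] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


Stage = Callable[[Scene], Scene]


def stage(name: str, **outputs: object) -> Stage:
    """Build a placeholder stage that stores stub outputs and records its name."""
    def run(scene: Scene) -> Scene:
        scene.data.update(outputs)
        scene.log.append(name)
        return scene
    return run


# Stage order follows the workflow described in the text; values are stubs.
PIPELINE: List[Stage] = [
    stage("semantic_analysis", action_units=["walk to door"]),
    stage("shot_design", shot_size="medium"),
    stage("motion_paths", path=[(0, 0), (4, 0)]),
    stage("visual_layers", lighting="dusk"),
    stage("sound", ambience="room tone"),
    stage("composition", output="preview"),
]


def run_pipeline(prompt: str, stages: List[Stage] = PIPELINE) -> Scene:
    """Pass a fresh scene through every stage in order."""
    scene = Scene(prompt=prompt)
    for step in stages:
        scene = step(scene)
    return scene


result = run_pipeline("Character walks slowly toward the door")
```

Keeping each stage behind the same small interface is one way to model the modularity the article describes: a stage can be replaced or reconfigured without changing the others.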

The infographic also clearly shows where users can exert creative control:

- Prompt Layer – Influence through precise formulation and structuring of input text

- Parameter Control – Fine-tuning of style, pacing, camera direction, and emotion profiles

- Path Control – Manual or text-based definition of movement paths in space

This modular structure enables a compelling interplay between automation and personalization. Marey handles operational film techniques – while creative vision remains with the user. The infographic makes it clear: those who understand the software’s language can create impressively precise scenes with just a few words.

Example Video: Cinematic Generation with Marey

AI-generated video via Marey, narrated by Ulrich Buckenlei

The video demonstrates how Marey automatically generates visual coherence, sound integration, and editing pace. The result is a 90-second mini-film with continuous narrative tension.

Marey thus demonstrates how far prompt-based film technology has already come – and what new forms of expression may soon become reality.

Talk to Our Experts About Your Project

Are you working with AI-generated video technology, developing immersive content formats, or experimenting with prompt-based production methods? Then talk to our team of experts. Together, we will identify concrete use cases, design options, and technological synergies for your organization.

Our experts in AI, XR, and 3D will help you strategically evaluate and practically leverage the potential of tools like Marey.

- Strategy Workshops – Understand the future potential of AI-generated content

- Proof-of-Concept – Fast-track entry into real AI film projects

- Technology Consulting – Selection, training, and integration of the right tools

Get in touch now – and shape the next chapter of creative production with us.

Contact Us:

Email: info@xrstager.com

Phone: +49 89 21552678

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@xrstager.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@xrstager.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich