AI-powered basketball analysis without sensors

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

Links & Resources

- GitHub Repo: https://github.com/abd…

- Basketball Detection Dataset: https://universe.roboflow.com/…

- Zero Shot Classifier: https://huggingface.co/…

- Basketball Court Keypoint Dataset: https://universe.roboflow.com/…

- YouTube Video: Build an AI/ML Basketball Analysis system

What if you could analyze a game – without sensors?

Imagine tracking basketball players, analyzing ball possession, calculating team stats and player speed – all without a single chip or sensor. This AI/ML project shows how it’s done: with video and vision models only.

Originally created by Code In a Jiffy, this open-source framework uses YOLO, OpenCV, and Python to detect players, the ball, team identities, passes, court keypoints, and distances in real time. It shows how far computer vision alone can go.
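As a rough illustration of the detection step, the sketch below wraps one frame's YOLO output and keeps only the classes of interest. It assumes the Ultralytics `YOLO` API; the `Detection` class, the helper names, the class labels, and the weights path are hypothetical and not taken from the original repository.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name, e.g. "player", "referee", "ball" (assumed labels)
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x1, y1, x2, y2) in pixels


def detect_frame(model, frame):
    """Run a YOLO model on one frame and wrap each box as a Detection."""
    result = model(frame)[0]  # Ultralytics returns one result per input image
    boxes = result.boxes
    return [
        Detection(result.names[int(cls_id)], float(score), tuple(xyxy))
        for xyxy, cls_id, score in zip(
            boxes.xyxy.tolist(), boxes.cls.tolist(), boxes.conf.tolist()
        )
    ]


def keep(detections, labels, min_confidence=0.5):
    """Keep only detections of the wanted classes above a score threshold."""
    return [
        d for d in detections
        if d.label in labels and d.confidence >= min_confidence
    ]


def detect_video(video_path, weights_path, labels=("player", "referee", "ball")):
    """Yield the filtered detections of each frame of a video file.

    Needs `ultralytics` and `opencv-python`; both are imported lazily so the
    helpers above stay usable without them.
    """
    import cv2
    from ultralytics import YOLO

    model = YOLO(weights_path)  # hypothetical path to trained weights
    capture = cv2.VideoCapture(video_path)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            yield keep(detect_frame(model, frame), labels)
    finally:
        capture.release()
```

Separating the model call from the filtering keeps the thresholding logic easy to check without a GPU or trained weights.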

Real-time YOLO detection of players and refs on the court

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

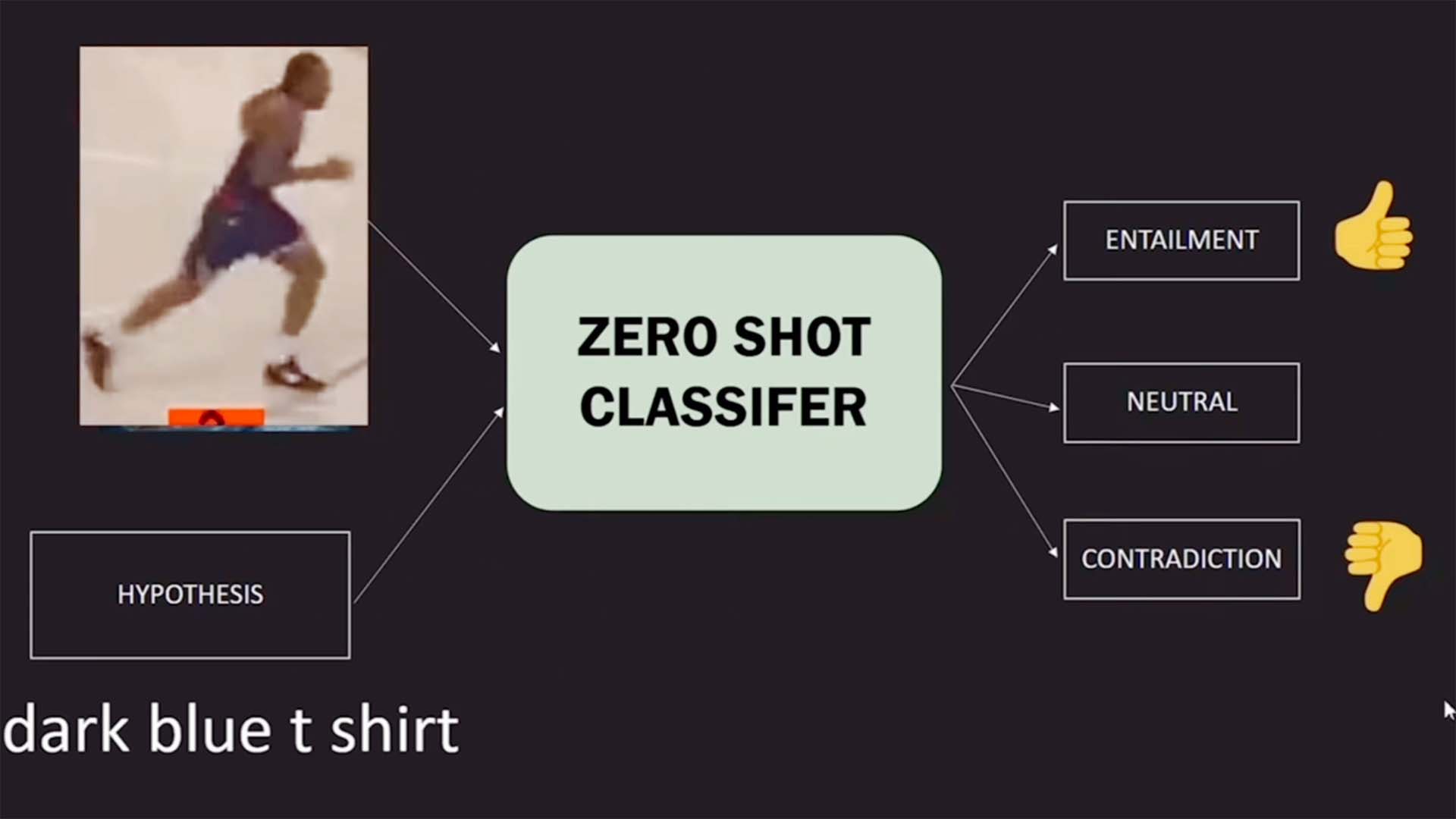

Zero-shot classification: How AI sees jersey colors

To distinguish teams, the project uses a zero-shot image classifier. Instead of training the model on every jersey combination, prompts like “dark blue t-shirt” guide the AI to assign team colors dynamically.

This approach adds flexibility. New uniforms? No problem. The classifier adapts to a new text description without retraining. It runs on a pretrained vision-language model from Hugging Face, which cuts preparation time and makes it easier to handle new matchups.
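The idea can be sketched with the Hugging Face `transformers` zero-shot image classification pipeline. This is a minimal sketch under assumptions: the CLIP checkpoint name, the second prompt, the team names, and all function names are illustrative choices, not necessarily what the project uses.

```python
def build_classifier(model_name="openai/clip-vit-base-patch32"):
    """Load a zero-shot image classifier (the model name is an assumption)."""
    # Imported lazily; needs `pip install transformers torch pillow`.
    from transformers import pipeline

    return pipeline("zero-shot-image-classification", model=model_name)


def pick_team(scores, team_prompts):
    """Map the best-scoring prompt back to a team name.

    `scores` has the shape the pipeline returns: a list of
    {"label": prompt, "score": probability} dictionaries.
    """
    best = max(scores, key=lambda entry: entry["score"])
    return team_prompts[best["label"]]


def classify_crop(classifier, crop, team_prompts):
    """Assign one cropped player image (a PIL image) to a team."""
    scores = classifier(crop, candidate_labels=list(team_prompts))
    return pick_team(scores, team_prompts)


# Prompt text -> team name. Changing uniforms only means editing this mapping.
TEAM_PROMPTS = {
    "dark blue t-shirt": "Team A",  # prompt quoted in the article
    "white t-shirt": "Team B",      # hypothetical second prompt
}
```

In practice one would call `classify_crop(build_classifier(), player_crop, TEAM_PROMPTS)` on the image region inside each player's bounding box, and probably cache the result per tracked player rather than classify every frame.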

Team detection using zero-shot image classification

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

Understanding the court: Keypoints and layout

What happens where? To answer this, the system learns the layout of the court via keypoint detection. A custom-trained model recognizes baskets, corners and zones from any angle.

This allows every action to be located accurately on the court. Combined with perspective correction, each detection gets a position in court coordinates – a crucial step toward tactical views and performance metrics.

Landmark-based detection of court positions and orientation

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

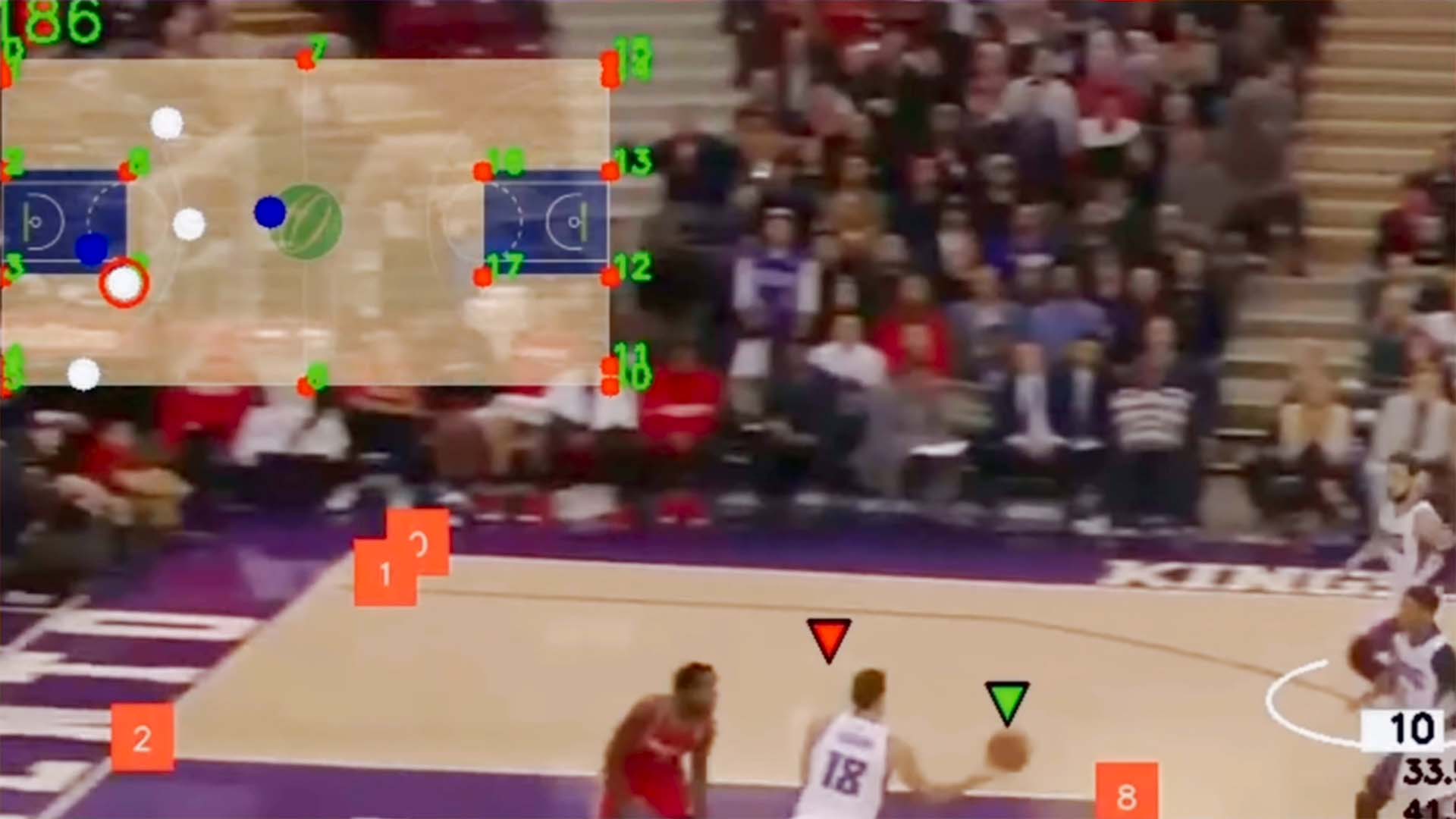

From video to top-down tactical map

Once the court layout is known, the camera view is transformed. A perspective transform fitted to the detected court keypoints flattens the image into a bird’s-eye view – unlocking powerful analytics.
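One common way to implement such a perspective mapping is a homography fitted to four known court points. The sketch below solves it directly with NumPy; the pixel coordinates are made up, the 28 m × 15 m court size is an assumed FIBA layout, and the function names are hypothetical. OpenCV offers `cv2.findHomography` and `cv2.perspectiveTransform` for the same job.

```python
import numpy as np


def fit_homography(image_pts, court_pts):
    """Solve the 3x3 homography H that maps image pixels to court coordinates.

    Uses exactly four point pairs and fixes H[2, 2] = 1, which gives an
    8x8 linear system in the remaining entries of H.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(image_pts, court_pts):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def to_court(H, points):
    """Project pixel points onto the court plane (with homogeneous divide)."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Hypothetical pixel positions of the four court corners in a broadcast frame.
IMAGE_CORNERS = [(300, 200), (980, 200), (1240, 680), (40, 680)]
# Matching corners on the top-down map, in meters (assumed 28 m x 15 m court).
COURT_CORNERS = [(0, 0), (28, 0), (28, 15), (0, 15)]

H = fit_homography(IMAGE_CORNERS, COURT_CORNERS)
player_feet_px = [(640, 400)]           # bottom-center of a player's box
player_on_court = to_court(H, player_feet_px)
```

With more than four keypoints a least-squares or RANSAC fit is usually more robust than this exact four-point solve.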

Now you can see passes, player movement, team formations and defense gaps – just like in pro-level dashboards. But this time, it’s AI-generated from video only.

Perspective transformation for tactical game analysis

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

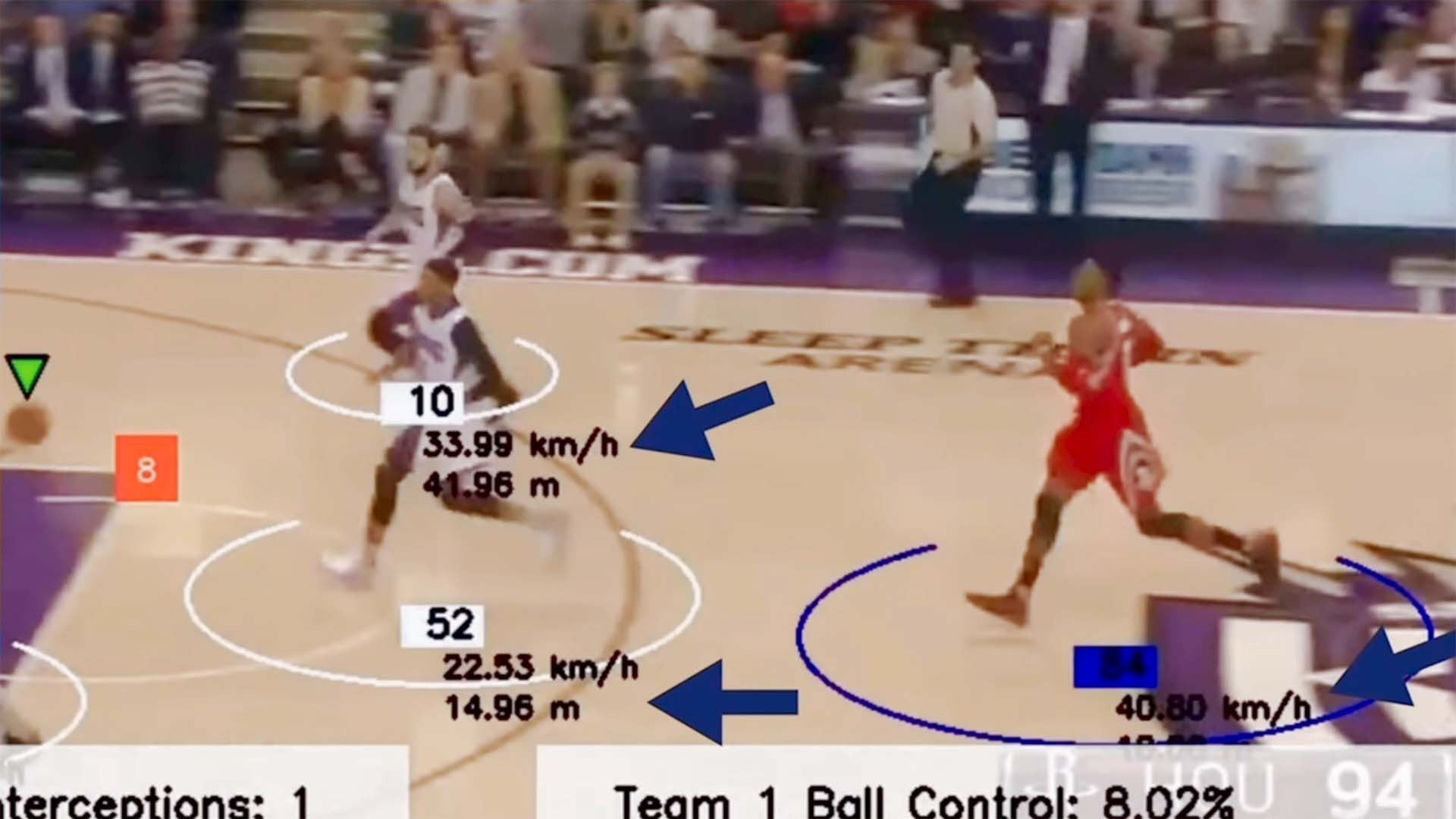

Speed, distance, performance: In meters, not pixels

How fast is each player? How far did they run? The system calculates real-world values by mapping pixel movement to court coordinates, so distances come out in meters and speeds follow from the time between frames.
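Once positions are in court coordinates, distance and speed reduce to simple arithmetic. The following is a minimal sketch, assuming one position in meters per video frame and a known frame rate; the function name and the sample track are illustrative only.

```python
import math


def distance_and_speed(track, fps):
    """Return (total meters, average km/h) for a track of court positions.

    `track` holds one (x, y) position in meters per video frame.
    """
    total = sum(math.dist(a, b) for a, b in zip(track, track[1:]))
    seconds = (len(track) - 1) / fps
    speed_kmh = (total / seconds) * 3.6 if seconds > 0 else 0.0
    return total, speed_kmh


# Three positions sampled at 2 frames per second: two 5 m steps in one second.
meters, kmh = distance_and_speed([(0, 0), (3, 4), (6, 8)], fps=2)
# meters == 10.0, kmh == 36.0
```

Real tracks are noisy, so a practical version would likely smooth positions or measure over a window of frames before reporting speed.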

This means coaches, analysts and fans can access pro-level stats without any wearables – just by analyzing the footage with the right models and vision logic.

Movement analysis using real-world coordinates

Image: © Code in a Jiffy | Visuals enhanced by Ulrich Buckenlei – Visoric GmbH

Video: AI-powered Basketball Breakdown

Watch the full breakdown in motion – from raw footage to tactical dashboard. The video walks you through every step of the project, showing results in real time.

If you’re building sports AI, or looking to replace sensors with vision: this is your reference project. Compact, elegant, open-source.

Full AI workflow from court video to tactical stats

Video: © Code in a Jiffy | Editing: Ulrich Buckenlei – Visoric GmbH

Build your own AI vision system

This project is open for experimentation – and a perfect base for sensor-free tracking in any domain. Whether you’re analyzing retail flows, sports or logistics – vision AI unlocks real-world insights.

Visoric supports teams and enterprises in developing custom AI-driven analysis systems: from concept to deployment. If this inspired you, get in touch.

- AI Vision Prototyping: Custom YOLO & OpenCV pipelines

- Sensor-Free Tracking: Real-world detection from video only

- Deployment Support: For sports, retail, industry and beyond

Let’s build something visionary. Together.

Contact Us:

Email: info@xrstager.com

Phone: +49 89 21552678

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@xrstager.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@xrstager.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich