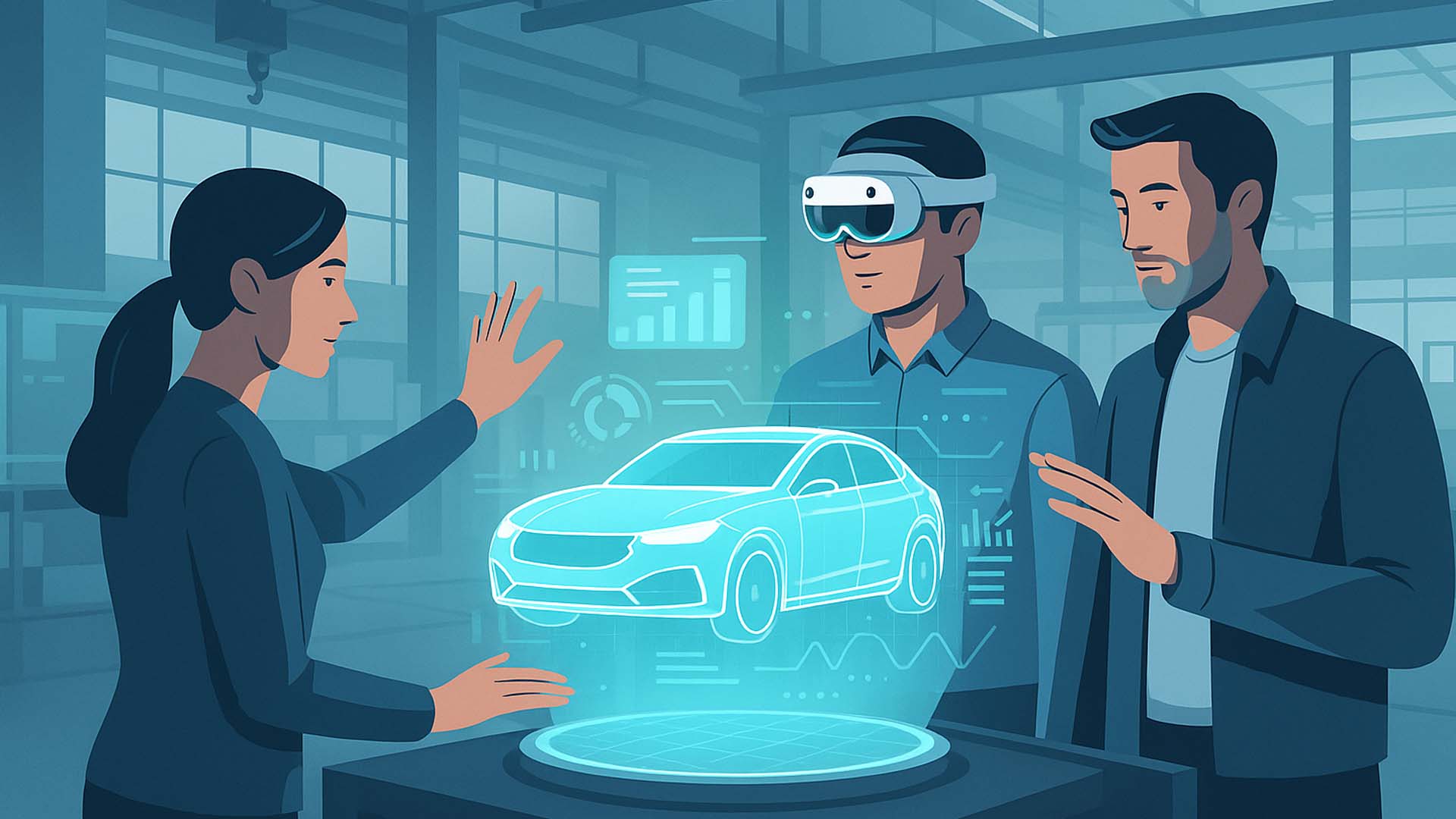

Image: From leisure to manufacturing – gaming technologies are transforming work processes. Visualization: Visoric GmbH 2025

From Play to the Workplace

Technologies that once brought excitement to living rooms are now measurably transforming the world of work. Motion pads, VR headsets, hand and body tracking, as well as spatial audio and lighting systems are leaving the realm of game consoles to become tools for manufacturing, training, and service. The key lies not in entertainment but in precision: what reliably captures movement, builds scenes in real time, and delivers millisecond feedback in games is ideal for safely practicing real-world tasks, understanding complex processes, and coordinating teamwork across distances.

This creates a new mode of learning and working for companies. Employees no longer experience processes as abstract diagrams or lengthy manuals, but as walkable, responsive environments. Instead of memorizing procedures, they develop skills through action: see, grasp, correct, repeat. The same sensors that trigger rewards in games detect errors in training environments, provide assistance, and document progress.

This transfer succeeds because gaming hardware is optimized for robust, repeatable, and low-latency interaction. Engines that reliably render billions of pixels for entertainment now visualize industrial data streams, safety zones, and quality metrics. At the same time, spatial audio makes situations more intuitive: warnings come from the direction of danger. This reduces cognitive load and increases training effectiveness.

- Movement becomes information: walking, grasping, and pointing control training and assessment

- Real-time feedback replaces trial and error in production with safe digital practice

- Spatial visualization makes processes, risks, and quality directly understandable
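
The idea that a warning should come from the direction of danger can be sketched as simple stereo panning. This is a minimal illustration, not how any particular engine implements spatial audio: it assumes a 2D floor plan, a listener heading given in radians, and a constant-power pan law. The function name `stereo_gains` is hypothetical.

```python
import math

def stereo_gains(listener_xy, heading_rad, hazard_xy):
    """Return (left_gain, right_gain) for a warning sound at hazard_xy.

    heading_rad is the direction the listener faces, measured
    counterclockwise from the +x axis. Constant-power panning is an
    assumed choice for this sketch, not taken from the article.
    """
    dx = hazard_xy[0] - listener_xy[0]
    dy = hazard_xy[1] - listener_xy[1]
    # Angle of the hazard relative to the facing direction
    # (positive means the hazard is to the listener's left).
    rel = math.atan2(dy, dx) - heading_rad
    # Lateral component: +1 = fully left, -1 = fully right, 0 = ahead or behind.
    lateral = math.sin(rel)
    pan = -lateral  # -1 = left speaker, +1 = right speaker
    theta = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] to [0, pi/2]
    return math.cos(theta), math.sin(theta)

# Listener at the origin facing +y; the hazard is directly to the right.
left, right = stereo_gains((0.0, 0.0), math.pi / 2, (1.0, 0.0))
```

A hazard straight ahead yields equal gains on both channels, so this sketch cannot distinguish front from back; real spatial audio systems use additional cues for that.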

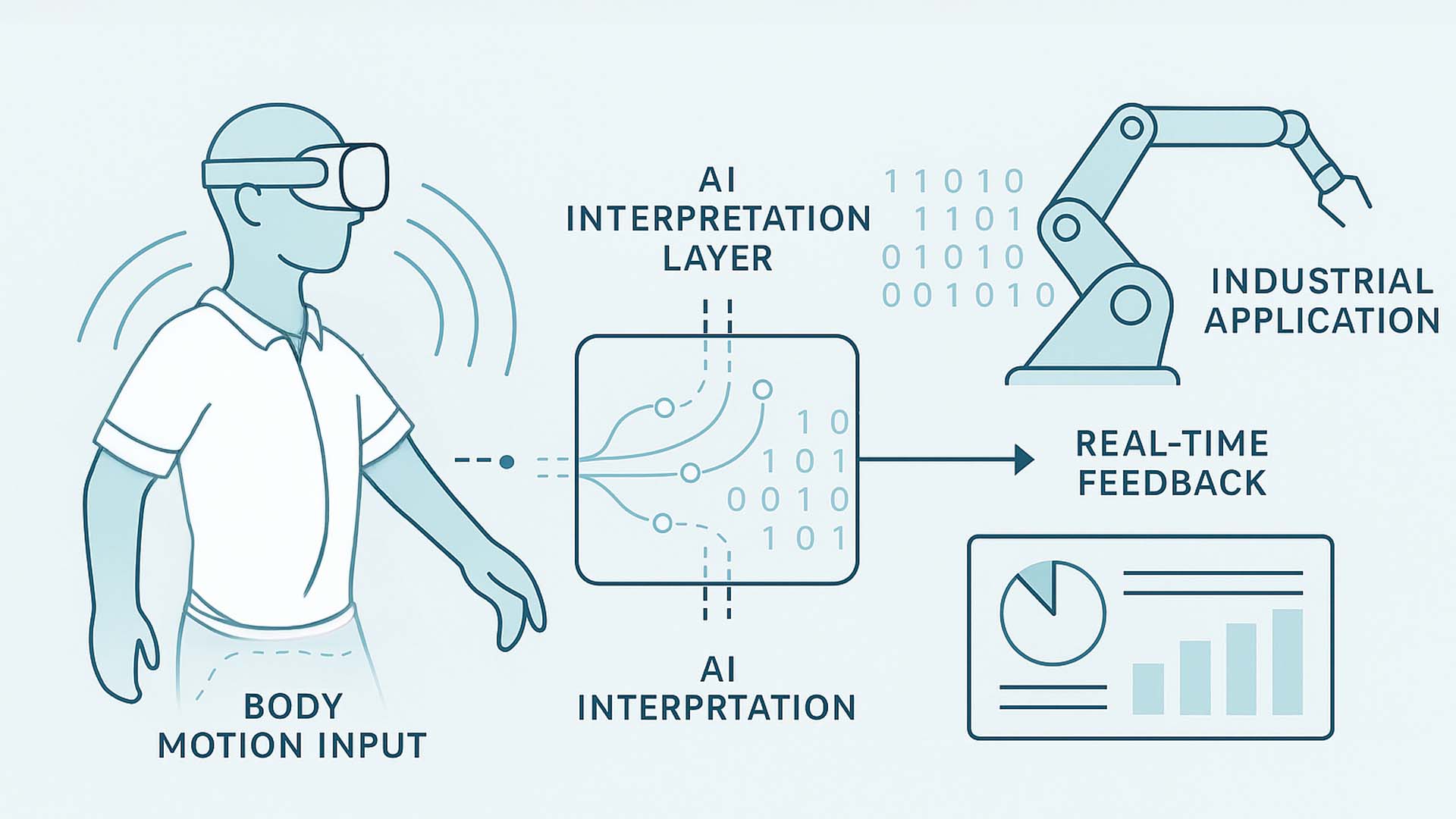

Image: From gaming to industry – technologies from gaming become tools for training, service, and manufacturing. Visualization: Visoric GmbH 2025

The result: companies shorten onboarding times, standardize quality, and can safely simulate rare or critical situations. Instead of blocking real equipment, scenarios run in parallel within digital twins. Teams learn to detect errors early, hand over processes cleanly, and work in sync with machines. This step is not a replacement for reality but a preparation for it – so precise that the transition into everyday work feels seamless.

This is where the next chapter begins: we show how movement becomes control – and why natural actions are easier to remember than pressing buttons or navigating menus.

When Movement Becomes Control

Our movements are becoming the language of machines. What once required switches, touchscreens, or controllers now happens intuitively through posture, gestures, and spatial position. Systems recognize direction, speed, and intent to derive commands. Action itself becomes the interface – more precise than any button, because it directly expresses the goal.

This logic is already being tested in modern workplaces. Technicians control robots with arm movements, warehouse workers guide autonomous vehicles through simple gestures, and in training labs, machine control is simulated on motion pads that digitally map every step. Humans remain in control, but technology understands them better than they expect. Movement becomes input, presence becomes signal.

This evolution transforms not only operation but also the ergonomics of work. Systems adapt to users – not the other way around. Incorrect posture or uncertain actions are detected before they cause errors. AI compares motion patterns with optimal sequences and provides preventive feedback. Machines thus become partners rather than mere tools.

- Gestures, gaze direction, and posture replace traditional input devices

- Adaptive systems recognize intentions and react contextually

- Movement becomes the universal language between human and machine
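
Comparing a recorded motion with an optimal sequence, as described above, can be sketched with dynamic time warping (DTW). DTW is an assumed technique here, chosen because it tolerates differences in speed; the article does not say which method such systems use. The names `dtw_distance` and `feedback` and the tolerance value are hypothetical.

```python
import math

def dtw_distance(recorded, reference):
    """Dynamic time warping distance between two sequences of 3D points."""
    n, m = len(recorded), len(reference)
    inf = float("inf")
    # cost[i][j] = best alignment cost of recorded[:i] with reference[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(recorded[i - 1], reference[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # recorded frame repeats
                                 cost[i][j - 1],      # reference frame repeats
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]

def feedback(recorded, reference, tolerance=0.15):
    """Return a coarse hint based on the length-normalized DTW distance."""
    score = dtw_distance(recorded, reference) / max(len(recorded), len(reference))
    return "ok" if score <= tolerance else "check posture"

# Hypothetical hand positions in meters along a reach movement.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
slower = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(feedback(slower, reference))
```

Because DTW aligns frames before measuring distance, a worker who performs the correct movement more slowly is not penalized, while a path that deviates in space is.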

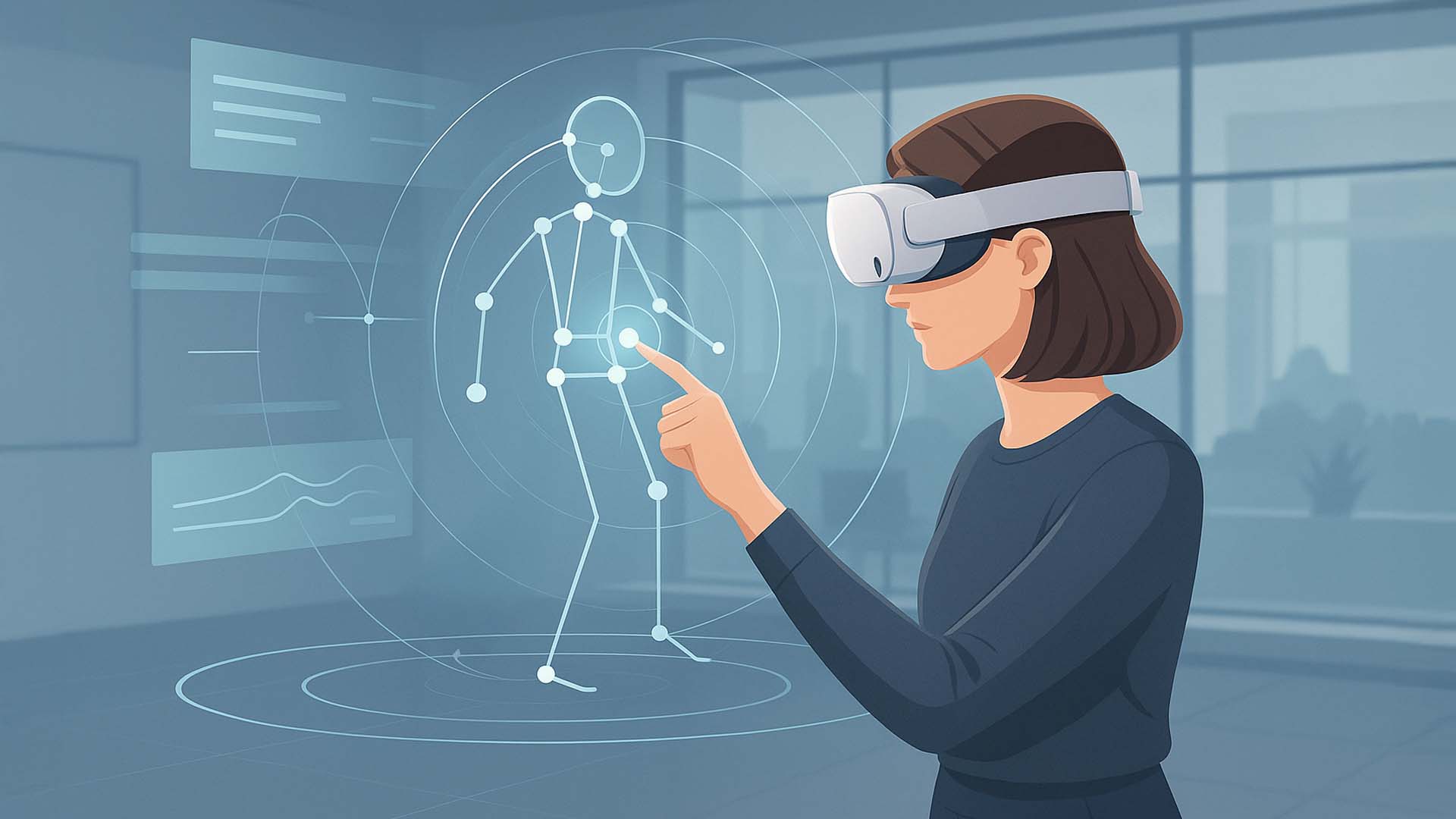

Image: Body movement as a control signal – machines react to intent instead of button presses. Visualization: Visoric Research 2025

Research shows that people learn up to 40 percent faster with motion-based interfaces and retain procedures more effectively. The reason: motor skills, perception, and cognition interact more closely. Unlike abstract menus, physical action becomes direct experience. This creates a new quality of control – one defined not by technology but by human naturalness.

This insight shapes the next stage of development: learning through experience. Instead of being explained, processes are made tangible. The next chapter shows what this looks like in practice.

Learning Through Experience

Knowledge doesn’t stay in the mind when it’s only read – it stays when it’s experienced. This insight from learning psychology underpins a new generation of training systems based on immersive technologies. With motion pads, headsets, and sensors, complex workflows can now be realistically simulated without risking machinery, materials, or safety. Learners stand in the middle of the process, see causes and effects, and grasp relationships that would otherwise remain abstract on paper.

Companies use this form of learning to transfer expertise faster and more sustainably. Instead of PowerPoint and manuals, experience takes center stage. Mistakes are allowed because they have no consequences, and this is precisely how competence develops. Those who perform maintenance virtually or rehearse an assembly process in a digital twin understand the details before executing them in reality.

The combination of movement, real-time feedback, and AI analysis demonstrably increases learning outcomes. Systems detect uncertainty, offer guidance, and measure progress. Abstract knowledge turns into experience, experience into skill. Meanwhile, costs and risks decrease because training no longer depends on real machines.

- Virtual training replaces dry theory with tangible practice

- Errors are analyzed, not punished – learning happens through doing

- Immersive simulations enhance safety, quality, and motivation

Image: Virtual training with real benefits – simulation replaces theory with experience. Concept visualization: Visoric XR Lab 2025

What once required extensive in-person sessions can now take place flexibly and at scale in XR environments. Whether machine operation, safety instruction, or service deployment – learners immerse themselves in adaptive scenarios that provide personalized feedback.

The next chapter explores how these experiences connect with real data and how information appears exactly where it’s needed – within the space itself.

Understanding Data in Space

What used to be numbers on monitors is now part of the environment. With spatial computing, AI, and modern 3D engines, production data, energy flows, or safety information can be brought to where they originate – into the physical workspace. This creates a new kind of perception: data becomes visible, tangible, and intuitively understandable.

In practice, this means that sensor values, machine states, or process progress appear as spatial overlays. Instead of reading abstract dashboards, employees see directly at the machine which components require maintenance or where deviations occur. This reduces search time, lowers error rates, and fosters shared understanding between engineering, planning, and execution.

The key lies in combining real-time data with spatial context intelligence. Systems interpret position, gaze direction, and user movement to display information where it matters. At the same time, AI can recognize data patterns and generate actionable recommendations – such as warnings of overheating, quality deviations, or process optimization suggestions. Abstract analysis becomes a direct visual experience.

- Sensor and machine data become visible directly in the workspace

- Spatial overlays make progress, risk, and quality intuitively perceptible

- AI detects patterns and provides real-time recommendations
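
Displaying information "where it matters" based on position and gaze direction can be sketched as a simple visibility test. This is an illustrative sketch under stated assumptions: machine positions are known 3D points, the gaze is a direction vector, and an overlay is shown when the machine is close enough and near the center of view. The function name, the distance limit, and the viewing angle are all hypothetical.

```python
import math

def visible_overlays(user_pos, gaze_dir, machines, max_dist=5.0, fov_deg=30.0):
    """Return machine names within max_dist and within fov_deg of the gaze
    direction, nearest first."""
    gx, gy, gz = gaze_dir
    g_norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if g_norm == 0.0:
        raise ValueError("gaze_dir must be a non-zero vector")
    hits = []
    for name, pos in machines.items():
        v = [p - u for p, u in zip(pos, user_pos)]
        dist = math.sqrt(sum(c * c for c in v))
        if dist == 0.0 or dist > max_dist:
            continue  # skip machines out of range (or at the user's exact position)
        cos_angle = (v[0] * gx + v[1] * gy + v[2] * gz) / (dist * g_norm)
        cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
        if math.degrees(math.acos(cos_angle)) <= fov_deg:
            hits.append((dist, name))
    return [name for _, name in sorted(hits)]

# Hypothetical shop-floor layout, coordinates in meters.
machines = {"press": (2.0, 0.0, 0.0), "lathe": (0.0, 3.0, 0.0), "oven": (10.0, 0.0, 0.0)}
print(visible_overlays((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), machines))
```

In this layout, looking along the x axis selects only the press: the lathe is outside the viewing angle and the oven is beyond the distance limit.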

Image: Making real data visible – spatial computing connects information and movement. Visualization: Visoric GmbH 2025

This form of visualization transforms not only operation but also communication. Teams share the same data context, whether on-site or remote. Decisions become faster because information is spatially anchored and thus more understandable. Transparency leads to trust.

The next chapter focuses on the human factor: how virtual spaces are becoming new platforms for collaboration and how mixed reality is transforming meetings and projects.

Collaboration in Virtual Space

Work no longer happens in a single location. Teams are distributed, projects are global, yet people must collaborate on the same models, processes, and ideas. Virtual and mixed realities create new spaces for this: environments that feel tangible though they are digital. Engineers, designers, and technicians can interact together – not through mouse and keyboard, but within the three-dimensional scene itself.

Mixed reality meetings are not a replacement for physical encounters but a logical extension. Seeing and manipulating a full-scale model together creates collaboration on a new level. Gestures become tools, presence becomes communication. Instead of explaining what they mean, participants literally reach into the same object. This builds understanding, accelerates alignment, and strengthens shared responsibility for results.

This technology also redefines the relationship between humans and machines. AI-based avatars and assistants are no longer mere observers but active team members. They log changes, suggest solutions, and visualize alternatives in real time. The boundaries between physical and digital workplaces blur – and with them, the limits of creativity.

- Virtual spaces connect teams across locations, languages, and time zones

- 3D models are collaboratively edited, reviewed, and understood

- AI assistants support communication, planning, and documentation

Image: Teamwork without borders – mixed reality connects people across locations. Visualization: Visoric Collab Studio 2025

This creates workplaces where closeness no longer depends on physical distance. Ideas can be reviewed faster, changes experienced together, and decisions made with greater confidence. At the same time, people feel more connected, even when separated by thousands of kilometers.

The next chapter makes this new form of experience measurable. It shows how AI and sensors analyze movements, identify patterns, and derive insights for safety, efficiency, and learning.

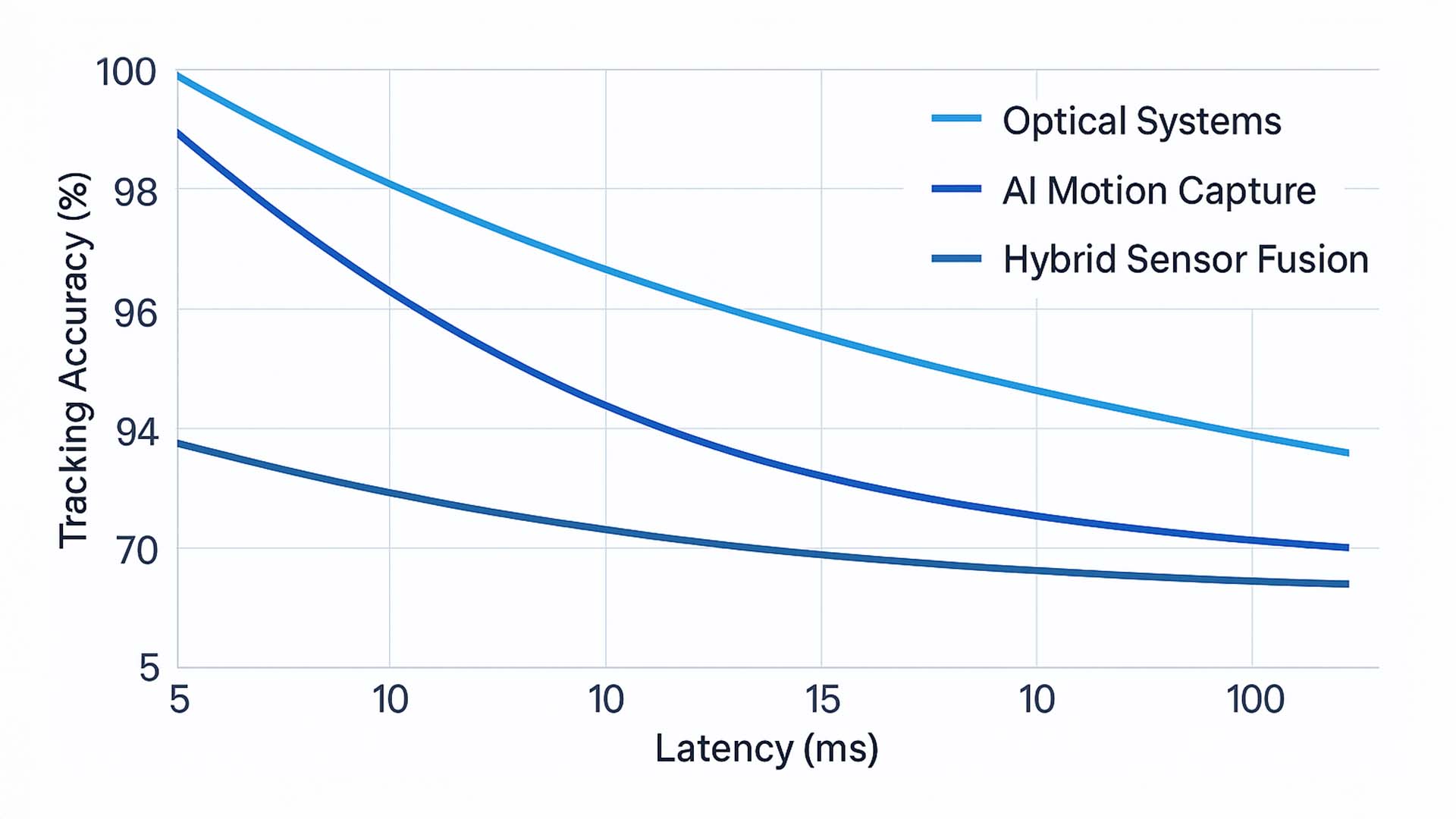

Scientific Insights into Motion Tracking

The foundation of these new motion systems, originating in gaming, lies in the precise measurement of human movement in real time. Whether in manufacturing, training, or collaborative robotics, system quality depends on two factors: accuracy and latency. The lower the latency, the more immediate the response; the higher the accuracy, the more realistic the interaction.

- Combining optical, sensor-based, and AI-driven methods

- Simulation of motion sequences with millisecond reaction times

- Application in training, production, and ergonomic optimization
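
The two quality factors named above, latency and accuracy, can each be expressed as a single number. The following sketch assumes that capture and response timestamps are available in seconds and that a ground-truth trajectory exists for comparison; the function names are hypothetical and the sample values are invented for illustration only.

```python
import math

def mean_latency_ms(capture_times, response_times):
    """Average delay between capturing a motion and the system's response, in ms."""
    delays = [(r - c) * 1000.0 for c, r in zip(capture_times, response_times)]
    return sum(delays) / len(delays)

def rms_error(tracked, ground_truth):
    """Root-mean-square distance between tracked and true positions."""
    squared = [math.dist(t, g) ** 2 for t, g in zip(tracked, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))

# Invented sample values: timestamps in seconds, positions in meters.
latency = mean_latency_ms([0.0, 1.0], [0.02, 1.04])
error = rms_error([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
                  [(0.0, 0.0, 0.0), (1.0, 0.2, 0.0)])
```

Lower values are better for both metrics, which makes it possible to place different tracking systems on a common latency-versus-accuracy plot.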

To illustrate these relationships, the following chart shows the relationship between latency and accuracy across different motion-tracking systems. Hybrid sensor-fusion systems, which combine optical and AI-driven methods, achieve the best balance between response time and precision.

Chart 1: Real-time accuracy compared to latency across motion-tracking systems.

Source: MIT CSAIL (2024), IEEE TVCG (2022), Meta Reality Labs (2023), Siemens Digital Industries (2024), Unity Research Labs (2023)

The results show that purely optical systems offer high precision but suffer higher delays during fast or complex motions. AI-based systems respond faster but depend on large datasets. The most effective approach combines both – AI intelligently evaluating sensor data while optical systems provide detailed visuals.
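
The principle of combining a fast source with a precise one can be illustrated with a classic complementary filter. This is explicitly a stand-in: it is not the AI-based fusion described above, and the article does not specify any algorithm. The sketch assumes one-dimensional motion, a fast sensor that reports per-step displacement with some bias, and optical position fixes that may be missing on some steps. The name `complementary_filter` and the weight `alpha` are hypothetical.

```python
def complementary_filter(fast_deltas, optical_fixes, alpha=0.9):
    """Fuse per-step displacements with optional absolute position fixes (1D).

    fast_deltas:   displacement reported by the fast sensor at each step
    optical_fixes: absolute position from the optical system, or None
                   when no fix arrived for that step
    alpha:         weight on the fast prediction (assumed value)
    """
    estimate = 0.0
    fused = []
    for delta, fix in zip(fast_deltas, optical_fixes):
        estimate += delta  # fast prediction, drifts if delta is biased
        if fix is not None:
            # Pull the prediction toward the slower but precise fix.
            estimate = alpha * estimate + (1.0 - alpha) * fix
        fused.append(estimate)
    return fused

# True motion is 1.0 per step; the fast sensor over-reports by 10 percent.
steps = 10
fused = complementary_filter([1.1] * steps, [float(k) for k in range(1, steps + 1)])
dead_reckoning = sum([1.1] * steps)
```

After ten steps the fused estimate is closer to the true position than pure dead reckoning, while still updating immediately on every fast-sensor sample.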

The second part of the analysis compares three AI model types for motion pattern recognition: classical convolutional neural networks (CNNs), modern transformer models, and advanced hybrid AI systems. CNNs detect local patterns in images, transformers capture temporal dependencies across movement sequences, and hybrid models combine visual data with sensor inputs from pads or cameras.

Chart 2: Recognition accuracy of different AI model types in motion analysis.

Source: MIT CSAIL (2024), IEEE TVCG (2022), Meta Reality Labs (2023), Siemens Digital Industries (2024)

The analysis shows that hybrid models achieve the highest accuracy, with a recognition rate of around 94 percent. They leverage the strengths of both visual and physical data, enabling new applications from preventive safety to adaptive control in industrial production.

From Vision to Application

What may look like a game is actually the beginning of a new industrial reality. Technologies developed for entertainment – motion tracking, realistic physics, adaptive simulations – now form the foundation for training, production, and design. The following video illustrates this convergence: when game mechanics become work logic and entertainment transforms into precision.

Video: From the game console to industry – high-tech meets practice. Production: Visoric XR Studio 2025

The video highlights that this is not just about technology but about a new mindset: movement as control, experience as knowledge, space as interface. These principles are transforming how we learn, design, and interact with complex systems in real time.

Companies that embrace gaming technologies in their workflows are investing in more than efficiency – they are investing in understanding, safety, and future readiness. Those who learn playfully act more effectively in reality.

- Interactive simulations enable safe learning and agile development

- XR technologies from gaming bring dynamism to training and production

- Practice-driven integration creates measurable value for people and processes

If you are considering how these technologies could be used in your organization – whether for training, manufacturing, or interactive presentations – talk to the Visoric expert team in Munich. Together, we develop solutions that make innovation tangible where it matters most: with people.

Expertise for the Future

The fusion of gaming, XR, and industry is no longer a distant trend: it is happening now, tangibly and with economic impact. Companies that begin integrating these technologies early gain not only efficiency but also a strategic advantage in thinking and action. However, this path requires experience, technological understanding, and a feel for the people who interact with these systems.

The Munich-based Visoric expert team guides companies along this path – from idea to implementation. In interdisciplinary projects, our specialists connect technological innovation with operational reality. The results are not experimental but productive – measurable in benefit and safe in use.

- Consulting and implementation for XR, AI, and real-time visualization

- Integration of gaming technologies into industrial, service, and training processes

- Development of customized showcases, training, and presentation solutions

Image: The Visoric expert team in Munich turns gaming into future industrial technology. © Visoric GmbH 2025

Source: Visoric GmbH | Munich 2025

Those who bring technologies from play into reality transform not only workflows but also how people interact with machines, data, and knowledge. If you want to know what this transformation could look like in your company, contact us – the Visoric team will show you what is already possible today.

Contact Us:

Email: info@xrstager.com

Phone: +49 89 21552678

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@xrstager.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@xrstager.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich