Programmable Reality: the new era of immersive out-of-home experiences

An immersive LED installation in an urban environment. Digital waves break out of the display and merge with light and fog to form a scene that appears real.

Visualization: © Ulrich Buckenlei | XR Stager Magazine 2025

When digital stories enter the urban space

Entirely new forms of visual communication are currently emerging in urban environments. The classic LED wall is evolving into a stage where digital content no longer stays inside the screen but extends into the physical surroundings. The principle of Programmable Reality combines animation, water, light, fog and sound into a coordinated choreography in which events on the screen trigger real physical reactions, creating an experience that can be perceived directly.

As an industry analyst, I am observing how this immersive out-of-home technology is developing into a medium in its own right. Cities are becoming spaces that can be narrated, brand presentations turn into spatial moments and pedestrians become participants in a real-time story. At the intersection of LED architecture, real-time control and physical effects, a format is emerging that goes far beyond traditional outdoor advertising and transforms public space into a narrative environment.

It is becoming increasingly clear that the question is not whether digital and physical layers will merge but how precisely they can be aligned. Installations that synchronize water bursts, atmospheric fog or dynamic light sequences with the visual content down to the millisecond show how a flat image becomes a spatial event. Programmable Reality describes exactly this new quality: a narrative layer that begins digitally and continues physically.

- Digital content becomes spatial → Animations generate real reactions in urban space

- Precise choreography → Water, light, fog and sound follow the visual timing

- New experiential layer → Out-of-home becomes an immersive medium that can be physically felt

After this first impression, the question arises of how such an installation is structured technically. The impact is not accidental but results from the precise interplay of digital content and physical effects. To understand how Programmable Reality works, it is worth looking at the individual layers that make such a scene possible in the first place.

How Programmable Reality works: the technical layers behind the illusion

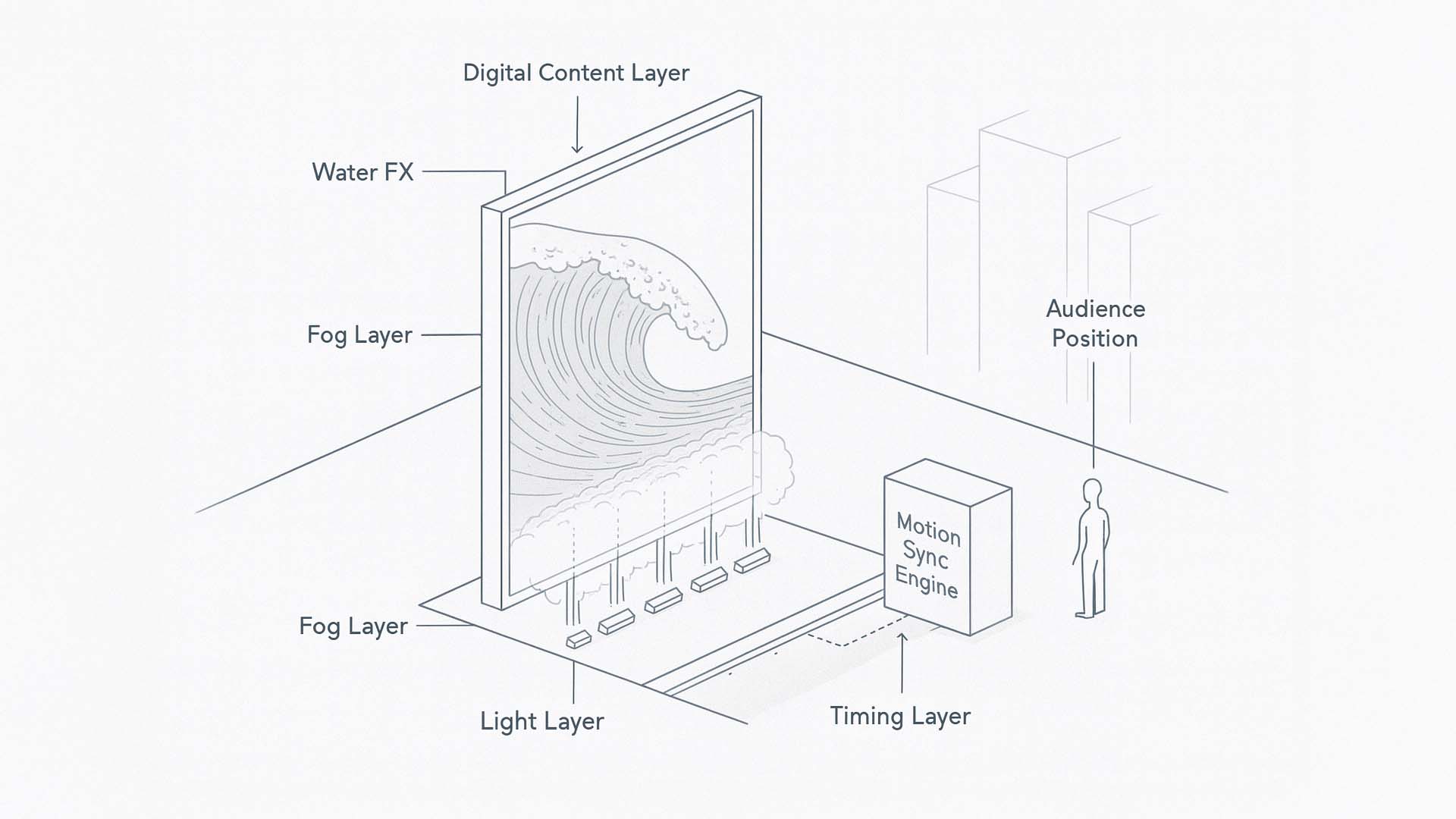

Programmable Reality does not rely on a single effect. The impact only emerges when digital content, physical effects and precise control synchronization merge. The scene shown in the first image illustrates exactly this combination. An LED screen forms the visual layer, while water, fog and light are placed in front of it as real physical layers. Both worlds interact in real time and transform a static façade into a dynamically responsive experience. For this immersive layering to work, multiple technical systems must operate together within milliseconds.

The following visualization shows the central building blocks of such a system and illustrates how digital and physical elements merge into a single narrative surface.

Technical function graphic: digital content, water, fog, light and motion sync merge into a single immersive experience system.

Visualization: © Ulrich Buckenlei | XR Stager Magazine 2025

The graphic shows the functional layers of the system. At the center is the large LED screen, which serves as the starting point for all reactions. The content determines the wave shape, the energy of the movement and the dramaturgical flow of the scene. These motion patterns are analyzed in real time by the Motion Sync Engine and passed on as control information to the physical effect modules.
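To make "analyzing motion patterns in real time" more concrete, here is a minimal Python sketch of one possible first step: comparing two consecutive frames of the LED content and measuring how much changes in each vertical strip of the screen. It assumes the engine receives downsampled grayscale frames as nested lists with values from 0.0 to 1.0 and that there are at least as many pixel columns as strips. Frame differencing is a simple stand-in chosen for illustration, and the function name `region_motion_energy` is hypothetical, not part of the system described here.

```python
from typing import List

# A frame as a grid of luminance values (rows of pixels), 0.0 = black, 1.0 = white.
Frame = List[List[float]]


def region_motion_energy(prev: Frame, curr: Frame, cols: int) -> List[float]:
    """Split the screen into `cols` vertical strips and return, per strip,
    the mean absolute luminance change between two frames
    (0.0 = no change, 1.0 = every pixel flipped from black to white)."""
    height, width = len(curr), len(curr[0])
    strip = width // cols
    energies: List[float] = []
    for c in range(cols):
        x0 = c * strip
        # The last strip absorbs any remainder columns.
        x1 = width if c == cols - 1 else x0 + strip
        total = 0.0
        for y in range(height):
            for x in range(x0, x1):
                total += abs(curr[y][x] - prev[y][x])
        energies.append(total / (height * (x1 - x0)))
    return energies
```

For example, `region_motion_energy([[0, 0, 0, 0]] * 2, [[0, 0, 1, 1]] * 2, 2)` returns `[0.0, 1.0]`: only the right half of the screen changed, so only effects positioned there would receive an impulse.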

- Digital Content Layer → The LED screen creates the visual narrative. Water waves, color gradients or rhythmic animations define the impulses that will later be implemented physically.

- Real Water FX → Directed water jets are positioned in front of the screen. They respond to the movement, energy and rhythm of the digital content and carry the motion out of the flat display into real space.

- Atmospheric Fog Layer → Fog forms the volumetric layer between screen and street. It acts as a three-dimensional carrier for light, color and movement and connects the digital and physical spatial layers.

- Reactive Light Layer → Light sources in the environment modulate intensity and color depending on the LED content. This extends the digital scene beyond the screen into the architecture and public space.

- Motion Sync Engine → The central control layer. It detects patterns, motion impulses and key events in the screen content and translates them into precise physical effects. It is the heart of Programmable Reality.

- Timed FX Controller → A timing module ensures that water bursts, fog pulses and light impulses match the animation down to the millisecond. The effect only works when everything reacts at the same moment.

- Audience Perspective → The observer’s position is part of the planning. The sense of physical depth only emerges from viewing angles at which screen, water and fog interact.
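The Timed FX Controller's task of making everything react at the same moment can be sketched as latency compensation: if each device needs a known time between receiving a command and producing a visible effect, its trigger has to be sent that much earlier. The device names and latency values below are hypothetical placeholders that would have to be measured on site; this is an illustrative sketch, not the controller used in the installation shown.

```python
from typing import Dict

# Hypothetical delays in milliseconds between sending a command and the
# effect becoming visible. Real values would be measured per installation.
DEVICE_LATENCY_MS: Dict[str, float] = {
    "water_jet": 120.0,  # valve opening plus travel time of the water
    "fog_pulse": 400.0,  # fog needs time to build up a visible volume
    "light": 5.0,        # LED fixtures respond almost immediately
}


def send_times(event_ms: float, latencies: Dict[str, float]) -> Dict[str, float]:
    """Return, per device, when its command must be sent so that all
    effects become visible exactly at event_ms."""
    return {device: event_ms - delay for device, delay in latencies.items()}


def required_lookahead_ms(latencies: Dict[str, float]) -> float:
    """The slowest device determines how early a visual event must be known."""
    return max(latencies.values())
```

For a wave that reaches the screen edge at 1000 ms, `send_times(1000.0, DEVICE_LATENCY_MS)` yields 600 ms for the fog, 880 ms for the water and 995 ms for the light. The 400 ms lookahead shows the design consequence: under these assumed latencies, the controller has to recognize an event before it becomes visible, for example by analyzing the content stream slightly ahead of the screen output and delaying the display by that amount.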

In combination, these building blocks create an immersive system that no longer separates digital and physical. The LED screen provides the narrative lead. The water jets convert movement energy into real force. The fog adds spatial depth and binds light, color and materiality. The light layers carry emotion beyond the visible frame. The entire choreography is orchestrated by the Motion Sync Engine, which instantly translates every change in the image into physical signals.

Programmable Reality represents a new stage of development: public spaces become narrative environments that do not just display content but react to it. The boundary between scene and city dissolves and digital stories extend into the real world.

With the technical layers clarified, the next question is which component controls the entire installation. The following chapter focuses on the Motion Sync Engine and its role as the central system that detects visual impulses and translates them into precise physical reactions.

The Motion Sync Engine as the central control system of immersive installations

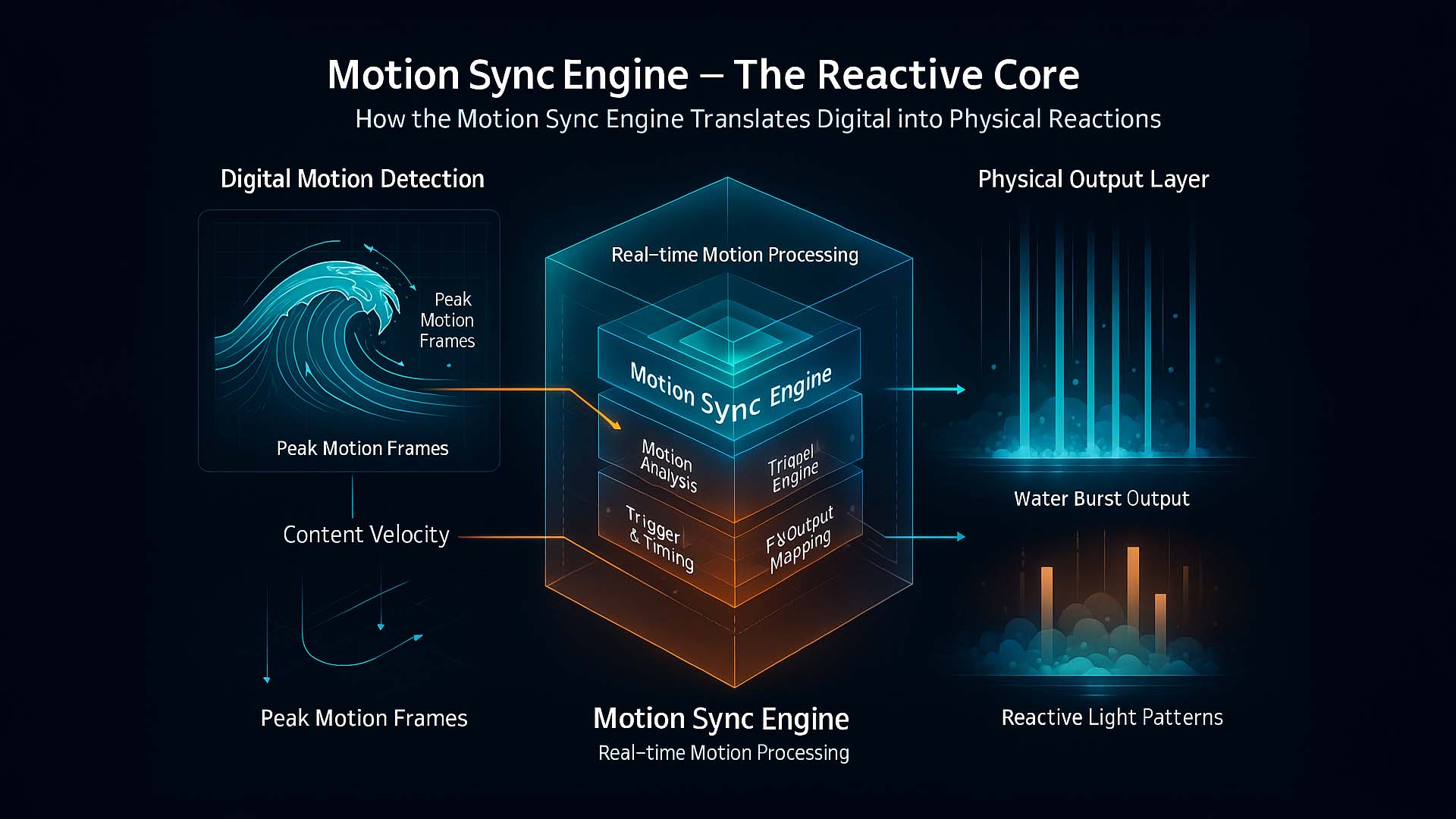

For digital images and physical effects to merge into a single fluid experience there must be a system that not only detects visual impulses but immediately translates them into real movement. The Motion Sync Engine fulfills exactly this role. It is the analytical center of the installation and reacts the moment a dynamic image section changes on the LED screen. Every wave, every color intensification and every motion impulse becomes a signal that can be controlled and that expands the real space. It makes tangible how closely digital and physical layers can be connected.

The following visualization shows how this process is structured. On the left, you see the digital origin of the scene. The wave structure is broken down into motion data describing speed, direction and intensity. These motion frames flow into the center, where the Motion Sync Engine performs its analysis. It identifies active image regions, detects patterns and decides which effects are triggered at which moment. On the right, you see the physical layer, in which water bursts, fog and light react to the previously processed information. The graphic illustrates how a two-dimensional movement becomes a spatial and physical experience.

Motion Sync Engine: the technical function graphic shows how digital movement is detected, analyzed and translated into precise physical effects.

Visualization: © Ulrich Buckenlei | XR Stager Magazine 2025
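The breakdown into speed, direction and intensity, followed by the selection of the most active image regions, could look roughly like the following Python sketch. It assumes the content is available as per-region lists of motion vectors `(dx, dy)` in pixels per frame (for example from an optical-flow step, which is not shown). The noise floor, the scoring rule "speed times intensity" and all names are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vector = Tuple[float, float]  # (dx, dy) in pixels per frame


@dataclass
class MotionSummary:
    speed: float          # mean vector length in pixels per frame
    direction_deg: float  # angle of the mean vector, math.atan2(dy, dx) in degrees
    intensity: float      # share of vectors longer than the noise floor (0..1)


def summarize(vectors: List[Vector], noise_floor: float = 0.5) -> MotionSummary:
    """Reduce one region's motion vectors (at least one) to speed, direction and intensity."""
    n = len(vectors)
    lengths = [math.hypot(dx, dy) for dx, dy in vectors]
    mean_dx = sum(dx for dx, _ in vectors) / n
    mean_dy = sum(dy for _, dy in vectors) / n
    return MotionSummary(
        speed=sum(lengths) / n,
        direction_deg=math.degrees(math.atan2(mean_dy, mean_dx)),
        intensity=sum(1 for length in lengths if length > noise_floor) / n,
    )


def most_active(regions: Dict[str, List[Vector]], top: int = 2) -> List[str]:
    """Rank regions by speed * intensity and return the names of the `top` most dynamic ones."""
    summaries = {name: summarize(vecs) for name, vecs in regions.items()}
    ranked = sorted(
        summaries,
        key=lambda name: summaries[name].speed * summaries[name].intensity,
        reverse=True,
    )
    return ranked[:top]
```

With regions `left = [(0.1, 0.0)]`, `center = [(3.0, 4.0)]` and `right = [(1.0, 0.0)]`, `most_active` returns `["center", "right"]`: the slight drift on the left stays below the noise floor and is ignored.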

The visualization shows only part of the process. Behind the engine, continuous calculations ensure that effects do not trigger too early, too late or with excessive intensity. The engine monitors the overall dynamics of the scene and ensures that water, fog and light do not behave in isolation but as a coordinated unit. This keeps the installation stable, believable and repeatable while maintaining its lively character.
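Keeping effects from firing too early, too often or too strongly is a classic signal-conditioning problem. A minimal sketch, assuming the engine produces one motion-energy value between 0 and 1 per frame: hysteresis (separate on and off thresholds) prevents flickering triggers, a cooldown limits how often a burst can repeat, and a ceiling clamps the intensity passed on. The class name `TriggerGate` and all threshold values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TriggerGate:
    """Turns a continuous motion-energy signal (0..1) into discrete, bounded triggers."""
    on_threshold: float = 0.6   # energy needed to fire
    off_threshold: float = 0.4  # energy must drop below this before the gate re-arms
    cooldown_ms: float = 500.0  # minimum time between two triggers
    max_intensity: float = 0.8  # ceiling for the intensity passed to the actuator
    _armed: bool = field(default=True, init=False, repr=False)
    _last_fire_ms: float = field(default=float("-inf"), init=False, repr=False)

    def update(self, energy: float, now_ms: float) -> Optional[float]:
        """Return a clamped intensity if a trigger fires at now_ms, otherwise None."""
        if not self._armed:
            if energy < self.off_threshold:
                self._armed = True  # the signal has calmed down; allow the next trigger
            return None
        if energy >= self.on_threshold and now_ms - self._last_fire_ms >= self.cooldown_ms:
            self._armed = False
            self._last_fire_ms = now_ms
            return min(energy, self.max_intensity)
        return None
```

Fed with the energies 0.9, 0.9, 0.3, 0.9 and 0.9 at 0, 100, 200, 300 and 600 ms, the gate fires at 0 ms and again at 600 ms, each time clamped to 0.8. The value at 300 ms is suppressed by the cooldown even though the dip at 200 ms had already re-armed the gate.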

- Digital Motion Frames → Motion data of the LED content is broken down into speed, direction and intensity.

- Motion Analysis Core → The engine detects patterns and prioritizes the most dynamic areas of visual movement.

- Trigger and Timing Module → This module calculates precise trigger moments so that water, fog and light react simultaneously.

- Output Mapping → The processed impulses are assigned to the respective effect modules and translated into real world actions.

- Physical FX Layer → Water bursts, fog layers and light moods create a physical extension of the digital scene.
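The Output Mapping step can be pictured as a lookup from screen position to physical actuator. The sketch below assumes a hypothetical layout of five water nozzles and four light zones along the screen plus a single fog channel; the positions, channel numbers and the halved fog response are invented for illustration and do not describe the actual installation.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical layout: nozzle positions along the screen width (0.0 = left, 1.0 = right)
JET_POSITIONS = [0.1, 0.3, 0.5, 0.7, 0.9]
LIGHT_ZONES = 4  # the light strips are assumed to be split into four equal zones


@dataclass
class Impulse:
    x: float          # horizontal position of the impulse on the screen, 0.0..1.0
    intensity: float  # already gated and clamped upstream, 0.0..1.0


@dataclass
class Command:
    module: str   # "water", "light" or "fog"
    channel: int  # index of the nozzle, light zone or fog machine
    level: float  # output level 0.0..1.0


def map_impulse(imp: Impulse) -> List[Command]:
    """Assign one impulse to the nearest nozzle, the matching light zone and the fog channel."""
    jet = min(range(len(JET_POSITIONS)), key=lambda i: abs(JET_POSITIONS[i] - imp.x))
    zone = min(int(imp.x * LIGHT_ZONES), LIGHT_ZONES - 1)  # x = 1.0 falls into the last zone
    return [
        Command("water", jet, imp.intensity),
        Command("light", zone, imp.intensity),
        Command("fog", 0, imp.intensity * 0.5),  # single fog machine with a softer response
    ]
```

An impulse at `x = 0.72` with intensity 0.8 is routed to nozzle 3 (at 0.7) and light zone 2, while the fog machine receives half the level.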

A crucial aspect is that the Motion Sync Engine does not rely on pre-rendered sequences. It reacts live to the digital image: every movement on the screen is immediately translated into an action in the space. This creates an experience that feels organic but remains fully controlled. The combination of real-time response and precision is what makes the illusion work and shows why Programmable Reality creates a new experiential quality.
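Reacting live rather than to a queue of older frames is partly a matter of buffering policy. One approach used in real-time video processing, sketched here under the assumption that frames arrive in a queue and analysis occasionally falls behind, is "latest frame wins": any backlog is discarded and only the newest frame is analyzed. The frame format and the function name `take_latest` are hypothetical.

```python
from collections import deque
from typing import Deque, Optional, Tuple

# A frame as delivered by the content player: (timestamp in ms, raw pixel data).
Frame = Tuple[float, bytes]


def take_latest(queue: Deque[Frame]) -> Optional[Frame]:
    """Empty the queue and return only the newest frame (None if the queue was empty).

    Older frames are dropped on purpose: analyzing a backlog would make water,
    fog and light lag behind what is currently visible on the screen."""
    latest: Optional[Frame] = None
    while queue:
        latest = queue.popleft()
    return latest
```

The trade-off is deliberate: a dropped frame costs a little analysis detail, while a growing queue would add delay to every effect that follows.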

The next chapter focuses on the system level behind this real time capability. It shows how various effect modules, sensors and control devices communicate and why their integration is essential for immersive installations that blend seamlessly into the real world.

Real time system architecture: how all components become one integrated system

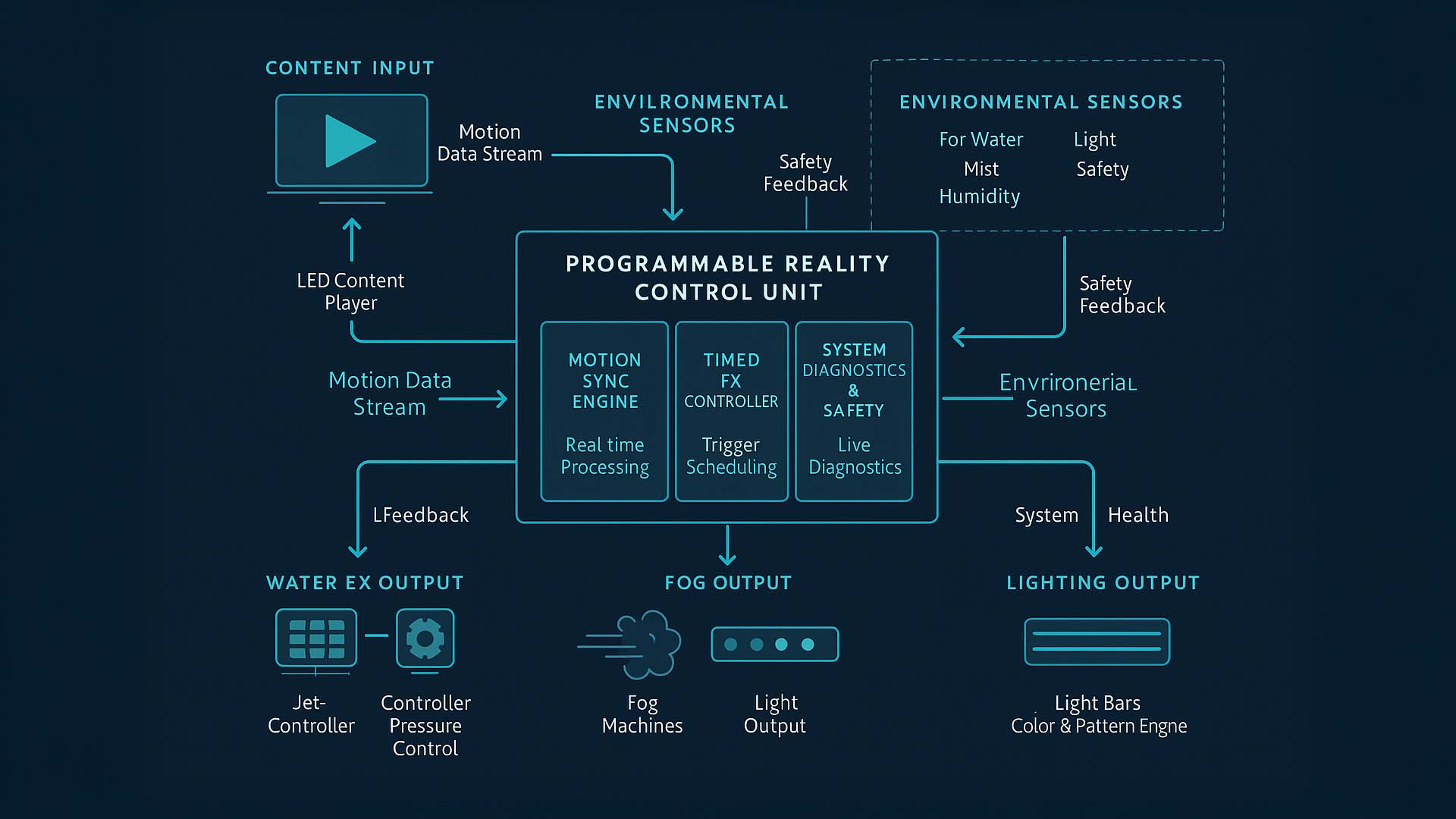

For an immersive installation to function reliably, not just for moments but for hours and days, it needs more than a strong visual idea and a precise Motion Sync Engine. Behind the scenes, a system architecture connects content, effect modules, sensors and safety logic. It ensures that every movement on the LED screen, every water jet, every layer of fog and every light impulse operates within a consistent control loop. Only this system layer turns individual effects into a robust experience suitable for operation in public spaces.

The following visualization shows this architecture in three layers. The path begins at the top with the Content Input. An LED content player delivers the visual content, from which a Motion Data Stream is generated. In parallel, Environmental Sensors capture readings for water, fog, humidity, light and safety. Both data streams flow into the middle layer, the Programmable Reality Control Unit. Three central modules work together in this core block: the Motion Sync Engine handles real-time analysis, the Timed FX Controller plans the effect timing and the System Diagnostics and Safety layer continuously monitors the system state. From this unit, the effect commands are sent to the physical layer.

Programmable Reality System Architecture: content input, control unit and physical outputs form a closed real time loop for water, fog and light.

Visualization: © Ulrich Buckenlei | XR Stager Magazine 2025
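Because the motion data stream and the sensor stream arrive at different rates, the control unit has to decide which environmental reading applies to each motion sample. Assuming the sensors report less often than the content player delivers frames, a simple strategy is sample-and-hold: every motion sample is paired with the most recent sensor reading taken at or before its timestamp. The field names such as `wind_mps` and the function names are hypothetical.

```python
import bisect
from typing import Dict, List, Optional, Tuple

Reading = Dict[str, float]  # e.g. {"wind_mps": 3.2, "humidity_pct": 71.0} (hypothetical fields)


def reading_at(sensor_times: List[float], readings: List[Reading], t: float) -> Optional[Reading]:
    """Return the newest reading taken at or before time t; sensor_times must be sorted."""
    i = bisect.bisect_right(sensor_times, t)
    return readings[i - 1] if i > 0 else None


def join_streams(
    motion: List[Tuple[float, float]],
    sensor_times: List[float],
    readings: List[Reading],
) -> List[Tuple[float, float, Optional[Reading]]]:
    """Pair every (timestamp_ms, motion_energy) sample with the environment state valid at that time."""
    return [(t, energy, reading_at(sensor_times, readings, t)) for t, energy in motion]
```

`bisect_right` keeps each lookup logarithmic in the number of stored readings, and a motion sample that predates the first sensor report gets `None`, which downstream safety logic can treat as "no data, do not fire".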

In the lower part of the graphic, the physical outputs become visible. On the left is the Water FX Output, controlled via a jet controller and pressure control that regulate the pressure and patterns of the water bursts. In the center is the Fog Output, which coordinates fog machines and light output so that volume, density and visibility match the visual content. On the right is the Lighting Output, where light strips and a color and pattern engine ensure that colors, brightness and patterns are precisely aligned with the digital scene. From all outputs, feedback lines return to the control unit, reporting system health and sensor data. This creates a closed loop in which the result of every action is reported back and can be corrected.
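The loop between pressure control and feedback line is the textbook setting for a feedback controller. As a hedged illustration, the sketch below uses a proportional-integral (PI) controller that compares the pressure reported on the feedback line with a target and sets the pump's duty cycle. The gains, the 0 to 1 duty-cycle range and the toy pump model in `simulate` are assumptions, not parameters of the installation described.

```python
from dataclasses import dataclass


@dataclass
class PressureLoop:
    """Minimal PI controller for a hypothetical jet pump."""
    kp: float = 0.5        # proportional gain
    ki: float = 0.1        # integral gain
    integral: float = 0.0  # accumulated error (bar * s)

    def step(self, target_bar: float, measured_bar: float, dt_s: float) -> float:
        """Return a pump duty cycle in [0, 1] from the latest pressure feedback."""
        error = target_bar - measured_bar
        candidate = self.integral + error * dt_s
        command = self.kp * error + self.ki * candidate
        if 0.0 <= command <= 1.0:
            # Only accumulate while the output is not saturated (simple anti-windup).
            self.integral = candidate
        return max(0.0, min(1.0, command))


def simulate(steps: int, target_bar: float = 2.0, dt_s: float = 0.1) -> float:
    """Toy pump model for illustration only: each step, the pressure moves
    20 % of the way toward 4 bar times the duty cycle."""
    loop = PressureLoop()
    pressure = 0.0
    for _ in range(steps):
        duty = loop.step(target_bar, pressure, dt_s)
        pressure += 0.2 * (4.0 * duty - pressure)
    return pressure
```

In this toy model, `simulate(1000)` settles at the 2 bar target. A purely proportional controller would stop short of it (at about 1.33 bar here); the integral term removes that remaining offset.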

- Content Input → An LED content player delivers the visual material and generates a continuous motion data stream.

- Environmental Sensors → Sensors monitor water, fog, humidity, light and safety parameters and send safety feedback to the control unit.

- Programmable Reality Control Unit → The central control unit integrates Motion Sync Engine, Timed FX Controller and System Diagnostics and Safety.

- Motion Sync Engine → Analyzes motion data in real time and decides which effects to trigger at what moment.

- Timed FX Controller → Plans trigger timing and ensures that physical effects run in a structured and reproducible way.

- System Diagnostics and Safety → Monitors the state of all modules and ensures that the installation operates within defined safety thresholds.

- Water FX Output → Jet controller and pressure control convert digital motion impulses into directed water bursts.

- Fog Output → Fog machines and light output create an atmospheric volume that expands the scene spatially.

- Lighting Output → Light strips and a color and pattern engine extend the digital story into the surrounding space.
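How the System Diagnostics and Safety module might use the feedback lines can be illustrated with a heartbeat check, a widespread pattern in distributed control: every output reports regularly, and any module that stays silent longer than a timeout no longer receives commands. The class name, the 250 ms timeout and the module names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HealthMonitor:
    """Heartbeat check for output modules (hypothetical sketch)."""
    timeout_ms: float = 250.0
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, module: str, now_ms: float) -> None:
        """Record a status report arriving on a module's feedback line."""
        self.last_seen[module] = now_ms

    def faulted(self, now_ms: float) -> List[str]:
        """Modules that have been silent for longer than the timeout, sorted by name."""
        return sorted(m for m, t in self.last_seen.items() if now_ms - t > self.timeout_ms)

    def allowed(self, module: str, now_ms: float) -> bool:
        """Only modules that are known and currently reporting may receive effect commands."""
        return module in self.last_seen and now_ms - self.last_seen[module] <= self.timeout_ms
```

Treating an unknown module as not allowed is a fail-safe default: an output that has never reported cannot be assumed to be ready.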

What the graphic only hints at becomes the decisive success factor in practice. The control unit not only synchronizes effects but also enforces limits: how high the water pressure may rise, how dense the fog may become and which light intensity makes sense under different environmental conditions. The installation becomes an adaptive system that balances creative freedom with technical responsibility. Only when this system layer operates reliably can Programmable Reality work in everyday public environments and integrate seamlessly into real space.
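The limits described above can be expressed as a function from environmental readings to effect ceilings, which the control unit would apply before any command reaches an actuator. Every threshold and rule in this sketch is a hypothetical placeholder meant to show the structure, not a recommended or tested safety value.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    max_pressure_bar: float  # ceiling for the water jets
    max_fog_density: float   # share of fog machine output, 0..1
    max_light_level: float   # share of fixture output, 0..1


def adaptive_limits(wind_mps: float, humidity_pct: float, ambient_lux: float) -> Limits:
    """Derive effect ceilings from current environmental readings (all rules hypothetical)."""
    # Hypothetical rule: stronger wind carries spray further, so the pressure
    # ceiling falls linearly from 3 bar and reaches zero at 10 m/s.
    pressure = max(0.0, 3.0 * (1.0 - wind_mps / 10.0))
    # Hypothetical rule: in very humid air fog lingers longer, so less output
    # is allowed to keep the street visible.
    fog = 0.4 if humidity_pct > 85.0 else 0.8
    # Hypothetical rule: dim the lights at night to avoid glare, allow full output by day.
    light = 0.3 if ambient_lux < 50.0 else 1.0
    return Limits(pressure, fog, light)
```

A requested burst would then be reduced with `min(requested_bar, limits.max_pressure_bar)` before it is sent, so creative intent and safety ceilings stay in separate, independently testable places.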

The next chapter shifts the focus from technology to application. It explores how brands, cities and operators can use these systems to activate public spaces through storytelling and what new forms of spatial narrative emerge.

How brands and cities transform public spaces into narrative experiences

Programmable Reality changes not only the technical structure of out-of-home installations but also their meaning. More and more brands, cities and operators use these systems not just to show content but to activate spaces. When digital scenes extend physically into urban environments, a new form of storytelling emerges that is not only seen but felt. People stop, interact and interpret. The installation becomes a stage and the urban space becomes a medium.

The following visualization shows this idea: a large-scale LED installation displays a luminous astronomical scene in an urban environment. Fog on the ground extends the digital imagery into real space, while reflective surfaces in the surroundings capture the light and envelop pedestrians. The interplay of digital story, atmospheric effects and real architecture creates a spatial narrative that goes far beyond traditional outdoor advertising.

Immersive Urban Activation: Digital content, ambient light and atmospheric effects merge into a spatial experience that immediately involves passersby.

Visualization: © Ulrich Buckenlei | XR Stager Magazine 2025

Such scenes show how public places can be transformed into narrative spaces. A brand no longer tells a linear story but a spatial one. Cities create atmospheric accents that express culture, innovation and identity. Operators discover a new format that sits between art, interaction and information. The installation becomes not just a medium but a social experience.

- Urban storytelling → Squares, façades and pathways become narrative surfaces that let digital content operate spatially.

- Brand experiences in space → Companies create not only visibility but physically perceivable scenes that attract people and invite them to stay.

- Cultural activation → Cities use immersive installations to enrich public areas atmospherically and create shared experiences.

- Spatial interaction → Passersby become part of the narrative and influence the perception of the moment through their presence.

- New formats for OOH → Out-of-home becomes an experiential surface that extends beyond digital screens and gains physical depth.

This new form of spatial design shows how quickly the boundaries between digital and physical experiences dissolve. The next step is to explore how such scenes can be captured cinematically and what role video plays in documenting and spreading immersive installations.

The experience in moving images: how the installation unfolds on film

Immersive installations unfold their full effect only when experienced in motion. Water bursts, light impulses, fog plumes and digital content respond precisely to each other, creating a dynamic that a still image cannot fully capture. The following video shows the installation in real time and makes visible how the individual layers work together.

Original installation: Disney Immersive Billboard.

Base footage: Public social media source. Scripting, narration and graphics: Ulrich Buckenlei.

The video documents the moment when digital animation, physical effects and spatial behavior merge into a single narrative. It shows how precisely the Motion Sync Engine operates and how spatially tangible Programmable Reality becomes in an urban environment.

Talk to the experts: how immersive technologies can be used effectively

Immersive technologies are changing how brands communicate, how cities design their spaces and how people experience environments. Many organizations are at a point where they recognize potential but still seek orientation. Whether it is technical frameworks, creative approaches or the feasibility of complex installations, expert exchange often brings decisive clarity.

Visoric: your expert team for immersive technologies and digital transformation.

Visualization: © Ulrich Buckenlei | Visoric Newsroom Munich

The Visoric expert team in Munich supports organizations in planning, evaluating and implementing immersive solutions in meaningful ways. Joint conversations lead to clear pathways, realistic roadmaps and often entirely new ideas that were not visible before. If you are considering how immersive systems might support your goals, we would be pleased to speak with you.

- Strategic assessment → How immersive technologies create value and where they can be used effectively.

- Technical guidance → Which systems, interfaces and data flows are required.

- Feasibility and implementation → What is achievable and how projects can be planned efficiently.

We warmly invite you to connect with us and explore new perspectives for your brand, company or city.

Contact Us:

Email: info@xrstager.com

Phone: +49 89 21552678

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Mail: ulrich.buckenlei@xrstager.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Mail: nataliya.daniltseva@xrstager.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich